How to Fine-tune Mixtral 8x7B with Open-source Ludwig - Predibase

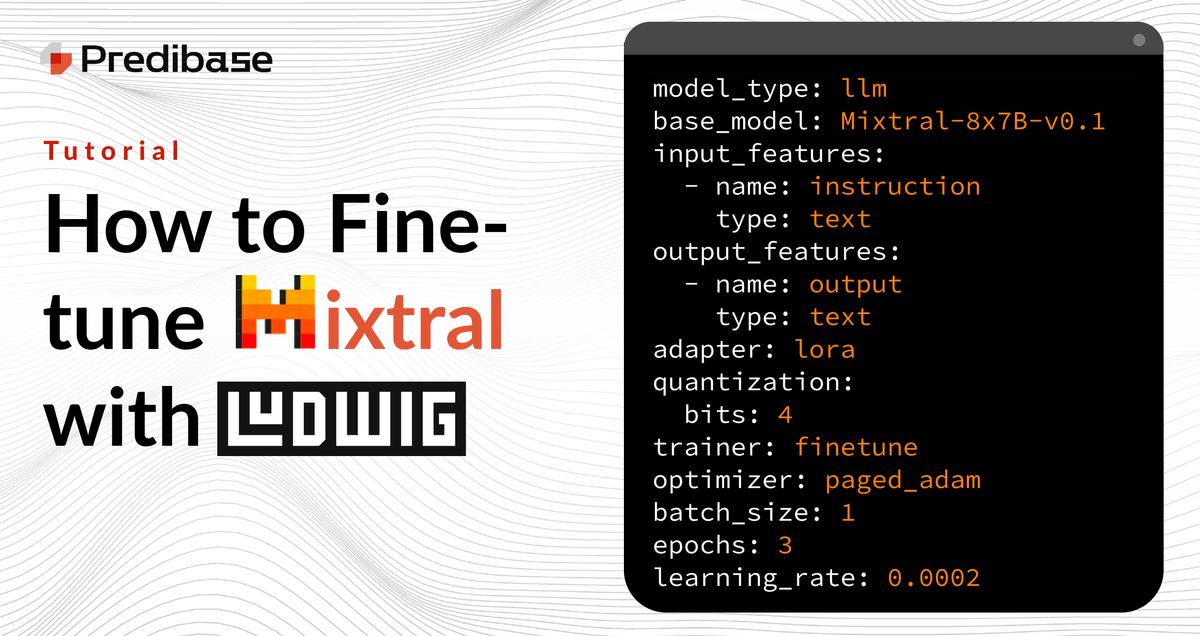

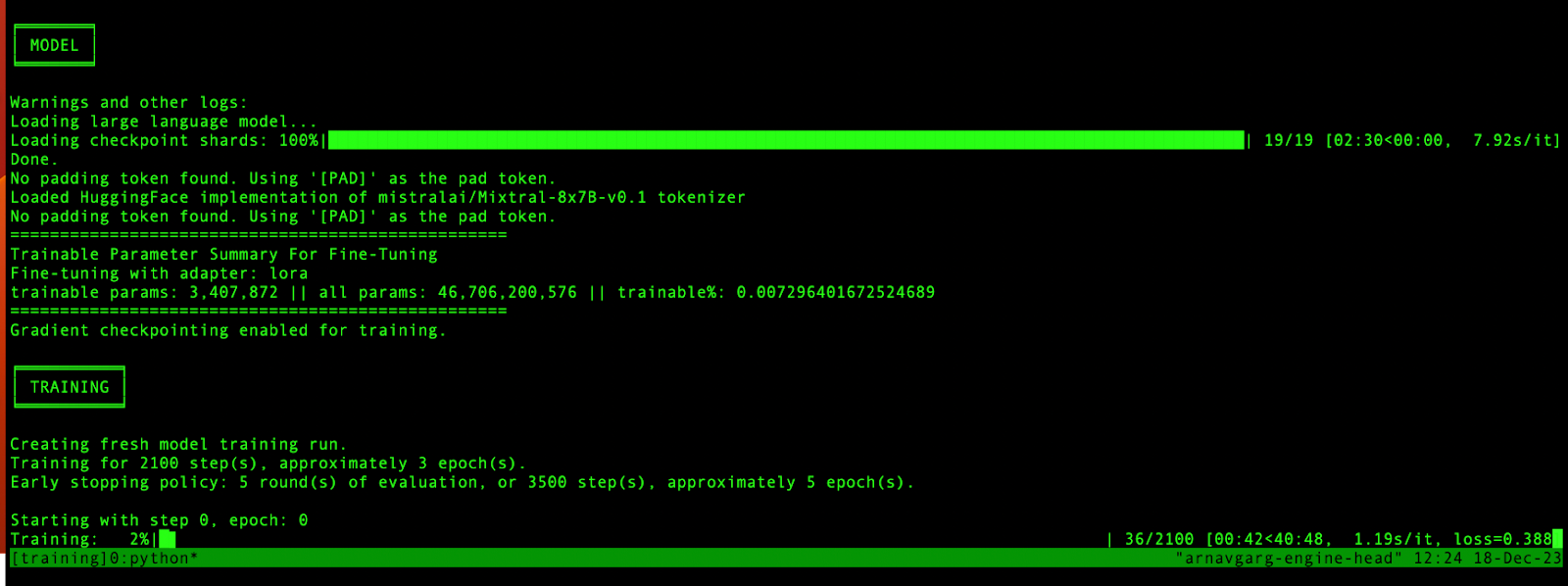

Learn how to reliably and efficiently fine-tune Mixtral 8x7B on commodity hardware in just a few lines of code with Ludwig, the open-source framework for building custom LLMs. This short tutorial provides code snippets to help get you started.

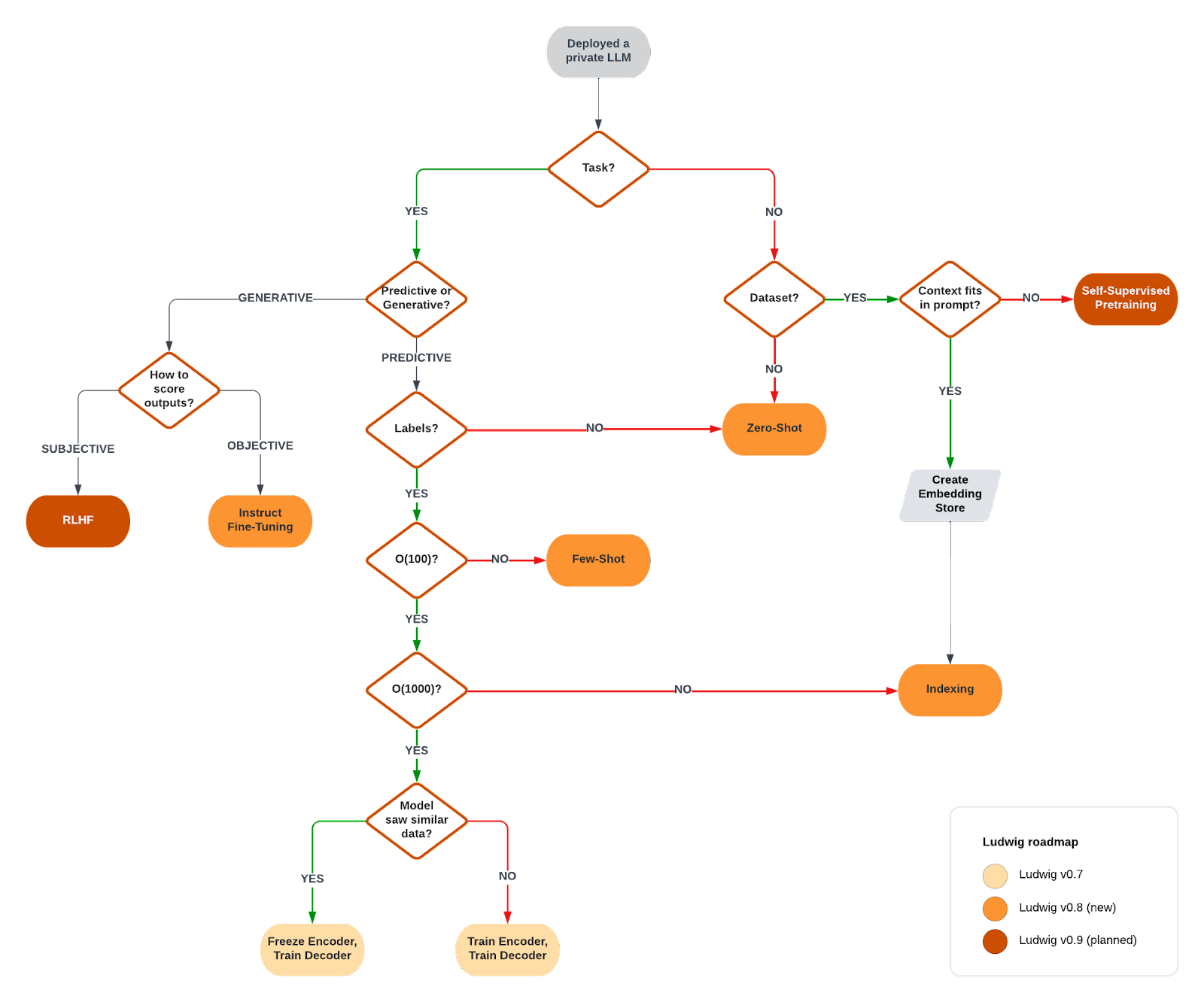

Ludwig v0.8: Open-source Toolkit to Build and Fine-tune Custom LLMs on Your Data - Predibase - Predibase

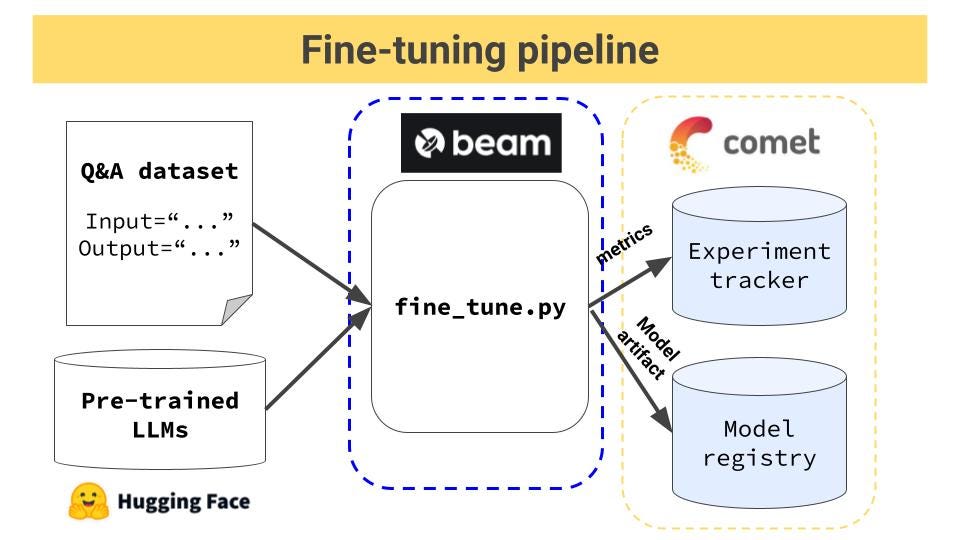

Fine-tuning Example
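The snippets from the original tutorial are not reproduced here, so the block below is only a minimal sketch of what a Ludwig fine-tuning setup for Mixtral 8x7B might look like. It assumes a Ludwig release recent enough to support Mixtral, the Hugging Face model ID `mistralai/Mixtral-8x7B-v0.1`, 4-bit quantization with a LoRA adapter so the model fits on a single commodity GPU, and a dataset with hypothetical `instruction` and `output` columns. The helper names `build_mixtral_config` and `train` and all hyperparameter values are illustrative assumptions, not the article's settings.

```python
from typing import Any, Dict


def build_mixtral_config(
    base_model: str = "mistralai/Mixtral-8x7B-v0.1",
    epochs: int = 3,
) -> Dict[str, Any]:
    """Hypothetical helper: build a Ludwig LLM config for LoRA fine-tuning."""
    return {
        "model_type": "llm",
        "base_model": base_model,
        # Load the base weights in 4-bit precision to reduce GPU memory (assumed setting).
        "quantization": {"bits": 4},
        # Train a small LoRA adapter instead of updating all model weights.
        "adapter": {"type": "lora"},
        # Column names are assumptions; they must match your dataset's columns.
        "input_features": [{"name": "instruction", "type": "text"}],
        "output_features": [{"name": "output", "type": "text"}],
        "trainer": {
            "type": "finetune",
            "epochs": epochs,
            # Illustrative values: tiny per-step batch, larger effective batch
            # via gradient accumulation.
            "batch_size": 1,
            "gradient_accumulation_steps": 16,
            "learning_rate": 0.0001,
        },
    }


def train(config: Dict[str, Any], dataset_path: str) -> str:
    """Hypothetical helper: fine-tune with Ludwig and return the output directory."""
    # Imported lazily so the config can be built and inspected without Ludwig installed.
    from ludwig.api import LudwigModel

    model = LudwigModel(config=config)
    # LudwigModel.train returns (training stats, preprocessed data, output directory).
    _train_stats, _preprocessed, output_dir = model.train(dataset=dataset_path)
    return output_dir


if __name__ == "__main__":
    cfg = build_mixtral_config()
    print(cfg["base_model"], cfg["adapter"]["type"], cfg["quantization"]["bits"])
```

On a GPU machine with Ludwig installed, a call such as `train(build_mixtral_config(), "train.csv")` (where `train.csv` is a placeholder path) would start fine-tuning. Keeping the config as plain data makes it easy to adjust the quantization, adapter, or trainer settings without touching the training call.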

How to Fine-tune Mixtral 8x7b with Open-source Ludwig - Predibase - Predibase

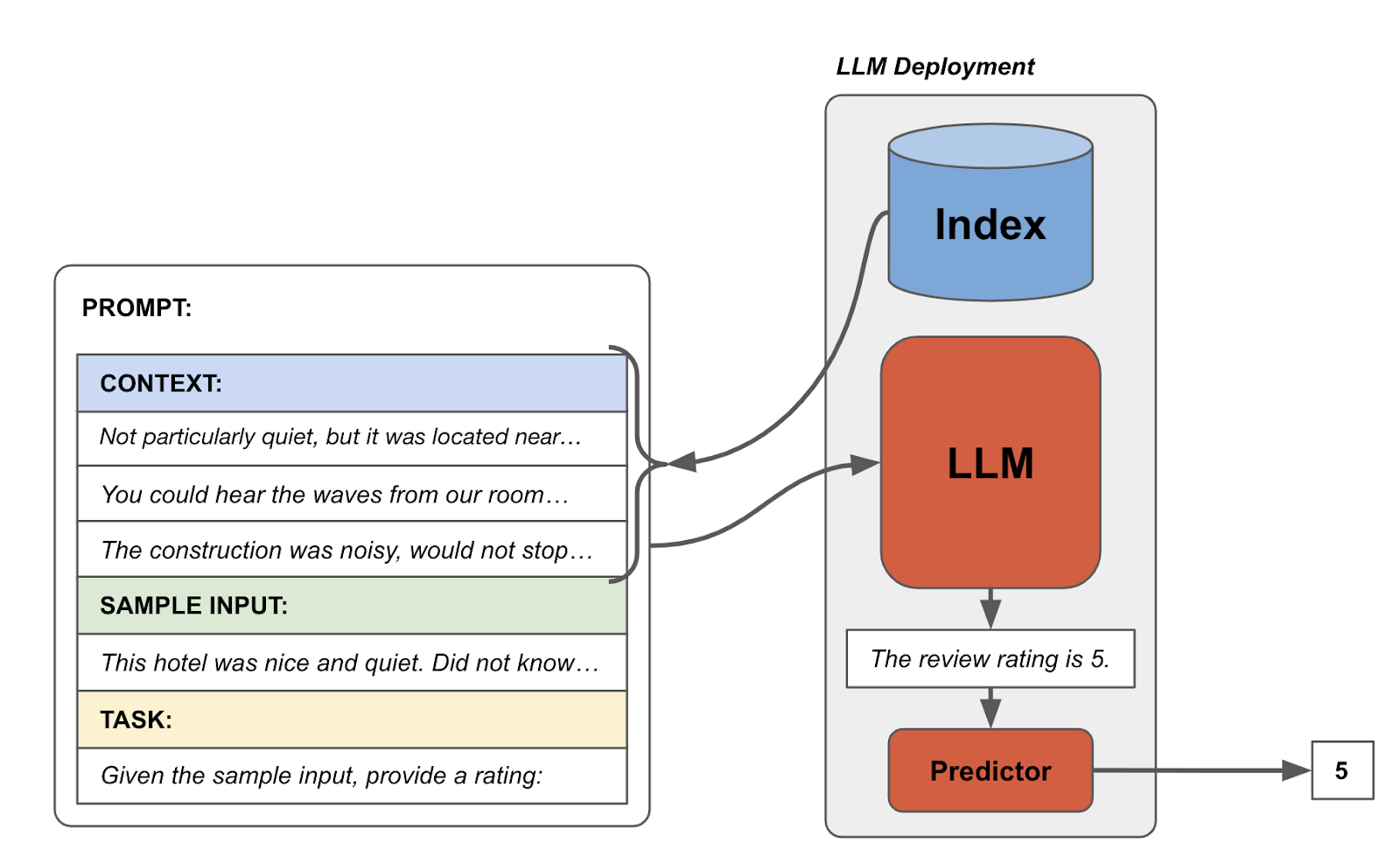

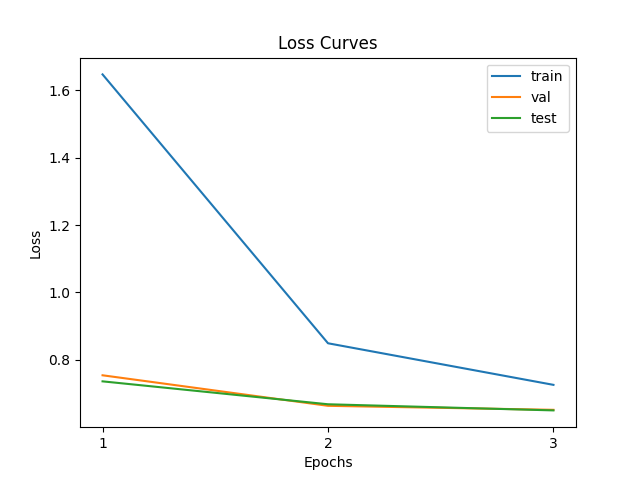

Unveiling the Power of Quantization and LoRa for Fine-Tuning Mistral 7B Model (LLM) on a Single Node GPU using Uber's Ludwig, by Siddharth vij

Ludwig v0.8: Open-source Toolkit to Build and Fine-tune Custom LLMs on Your Data - Predibase - Predibase

How to Fine-tune Mixtral 8x7b with Open-source Ludwig - Predibase - Predibase

Fine Tune mistral-7b-instruct on Predibase with Your Own Data and LoRAX, by Rany ElHousieny, Feb, 2024

Predibase on LinkedIn: #llms #engineers

Travis Addair on LinkedIn: #raysummit

Devvret Rishi on LinkedIn: Fine-Tune and Serve 100s of LLMs for the Cost of One with LoRAX

Devvret Rishi on LinkedIn: Fine-Tune and Serve 100s of LLMs for the Cost of One with LoRAX

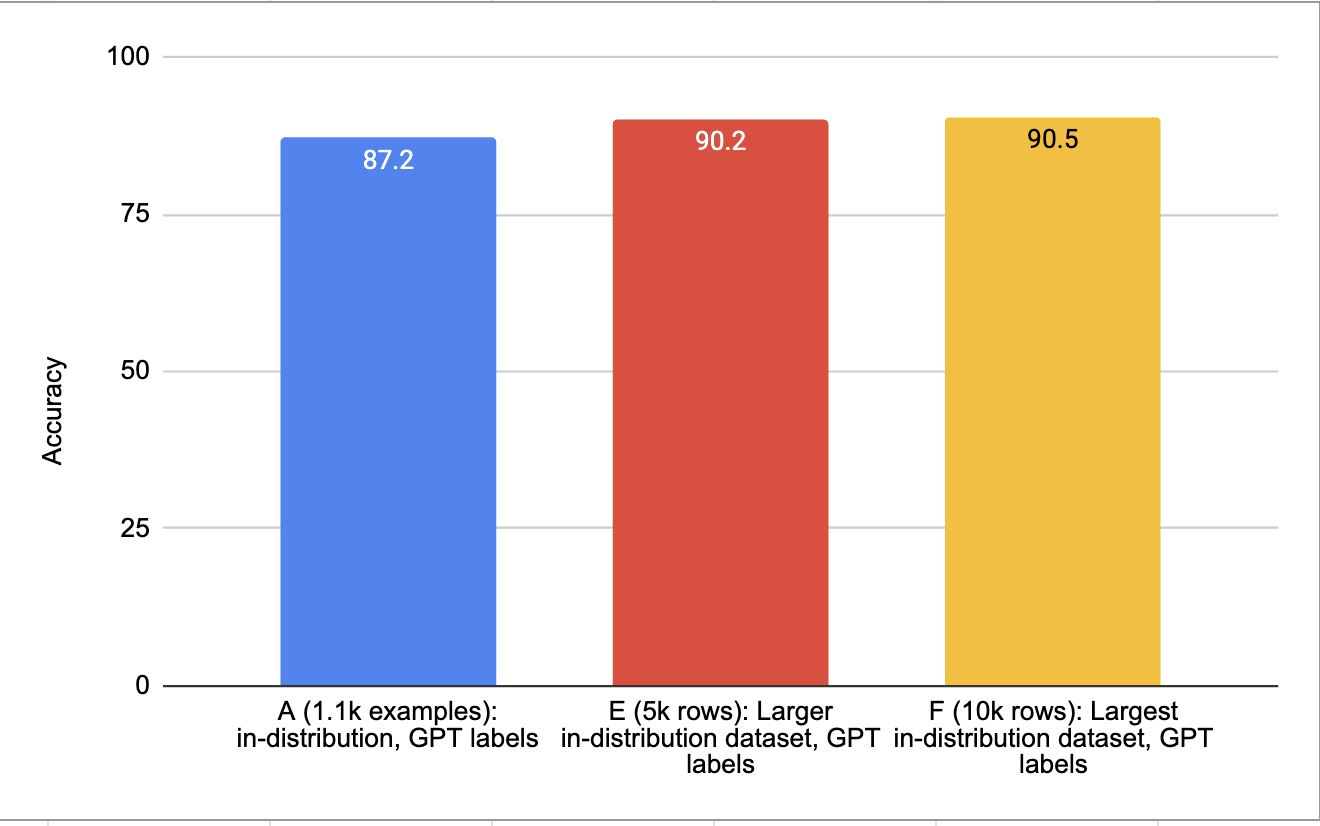

Graduate from OpenAI to Open-Source: 12 best practices for distilling smaller language models from GPT - Predibase - Predibase

How to Fine-tune Mixtral 8x7b with Open-source Ludwig - Predibase - Predibase

Deep Learning – Predibase