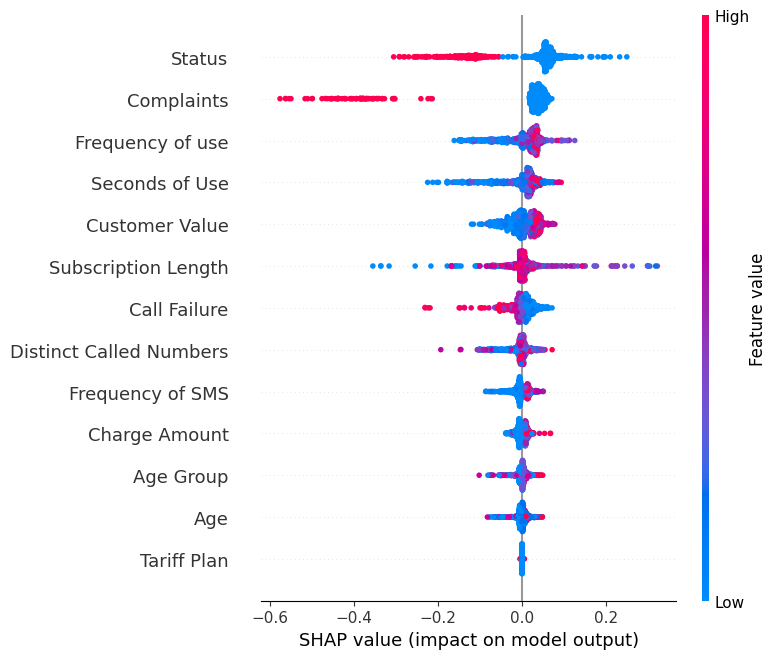

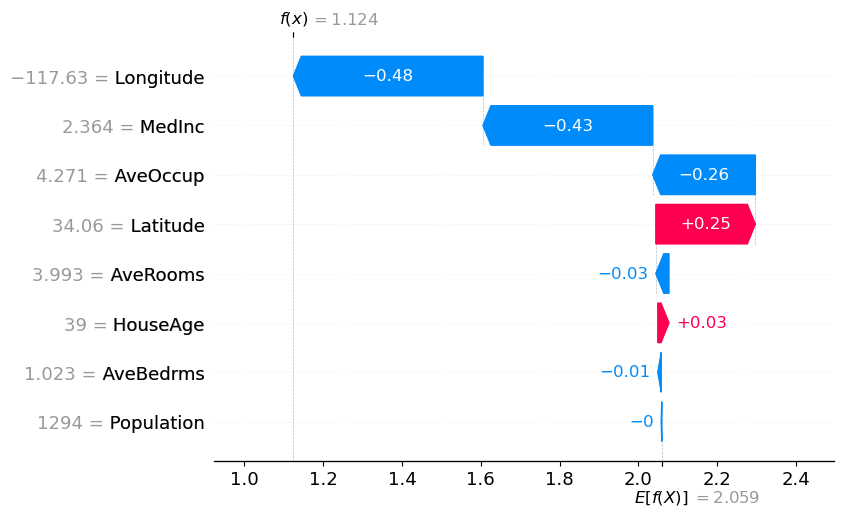

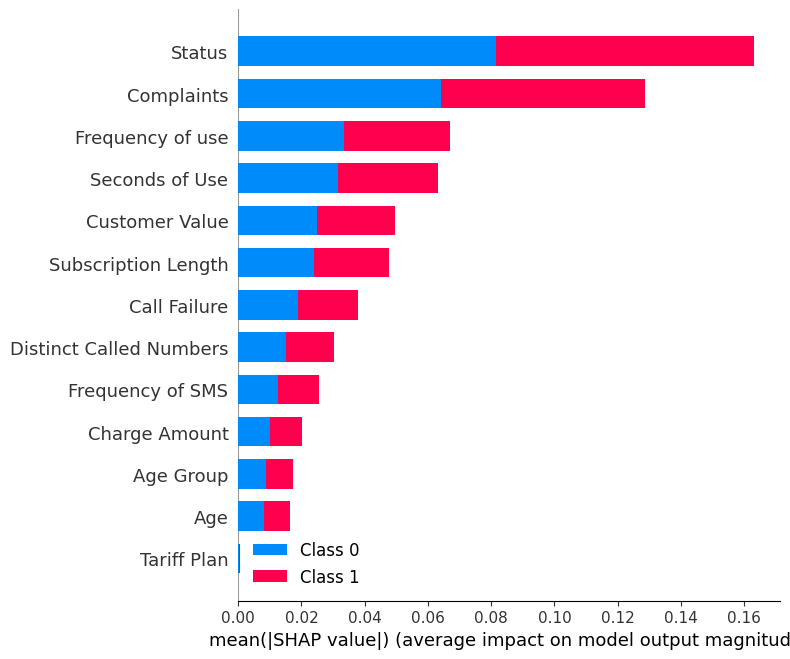

Using SHAP Values to Explain How Your Machine Learning Model Works


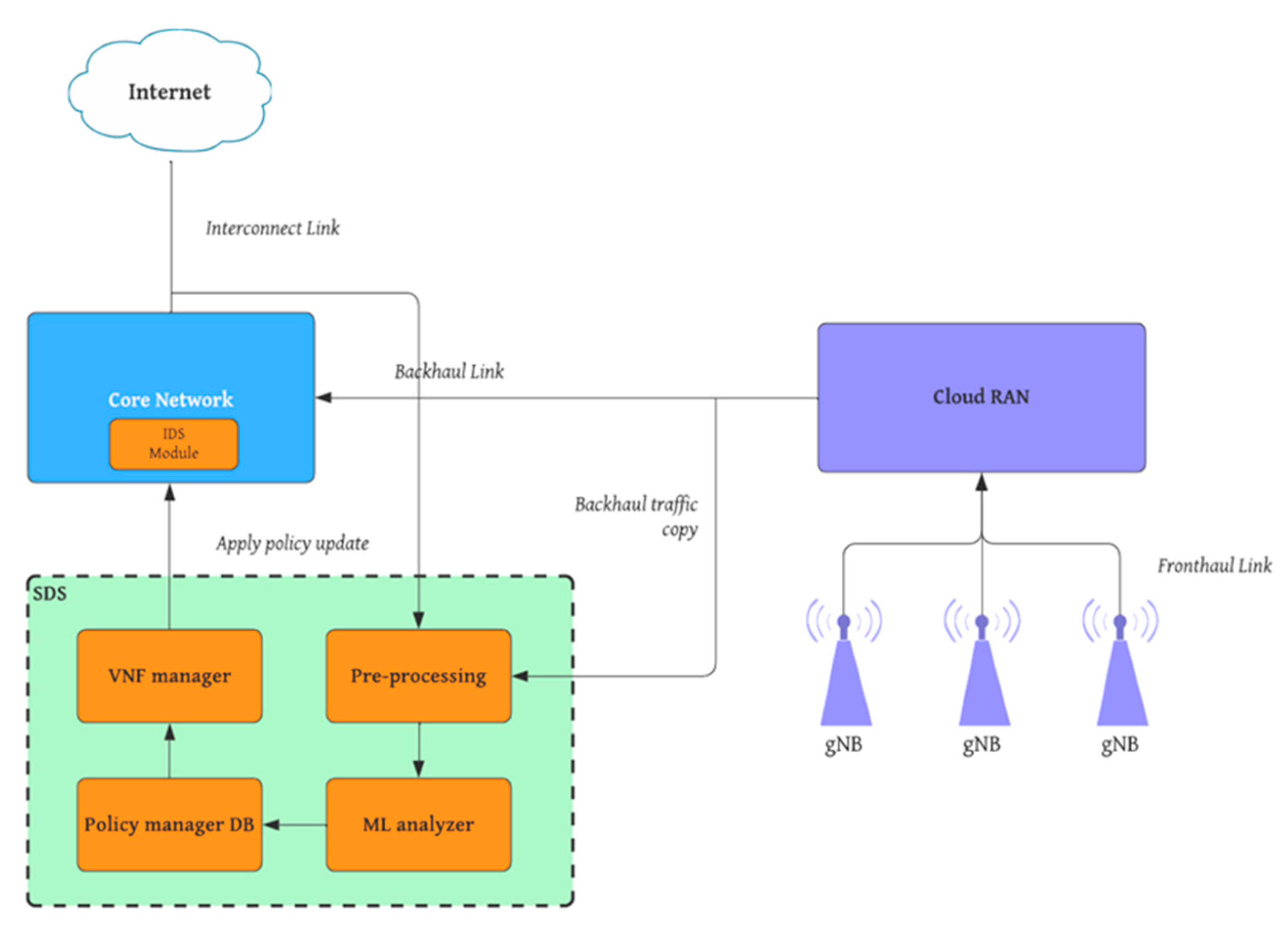

Sensors, Free Full-Text

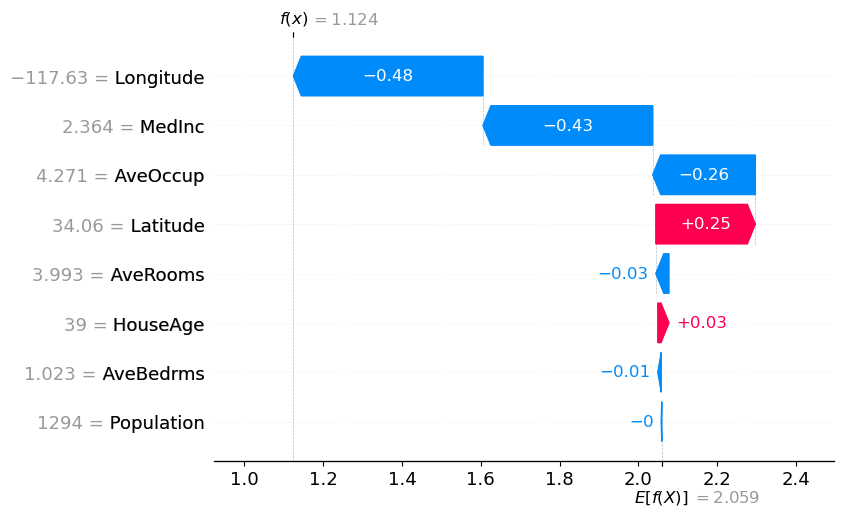

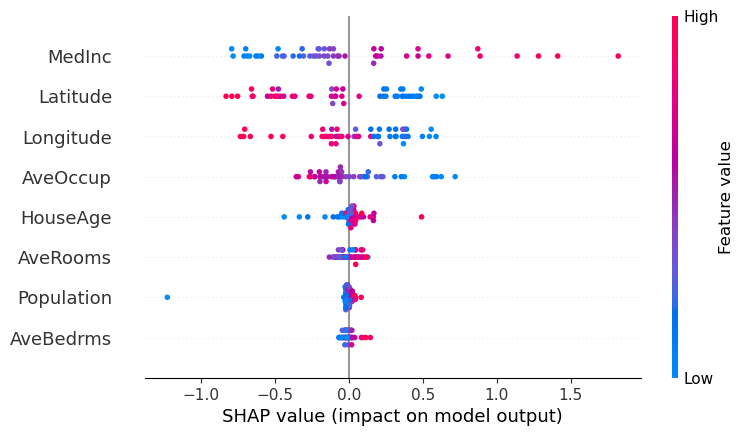

Using SHAP Values to Explain How Your Machine Learning Model Works, by Vinícius Trevisan


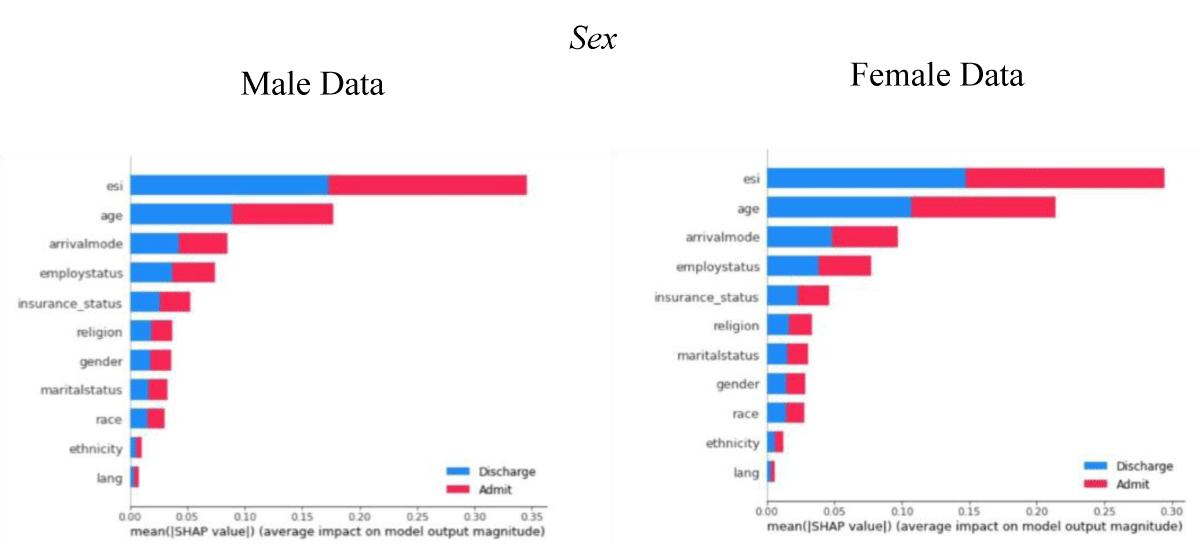

Using Model Classification to detect Bias in Hospital Triaging

A Game Theoretic Framework for Interpretable Student Performance Model

List: Feature Importance with SHAP, Curated by Lars ter Braak

An Introduction to SHAP Values and Machine Learning Interpretability

New Report: Risky Analysis: Assessing and Improving AI Governance Tools
