Pre-training vs Fine-Tuning vs In-Context Learning of Large Language Models

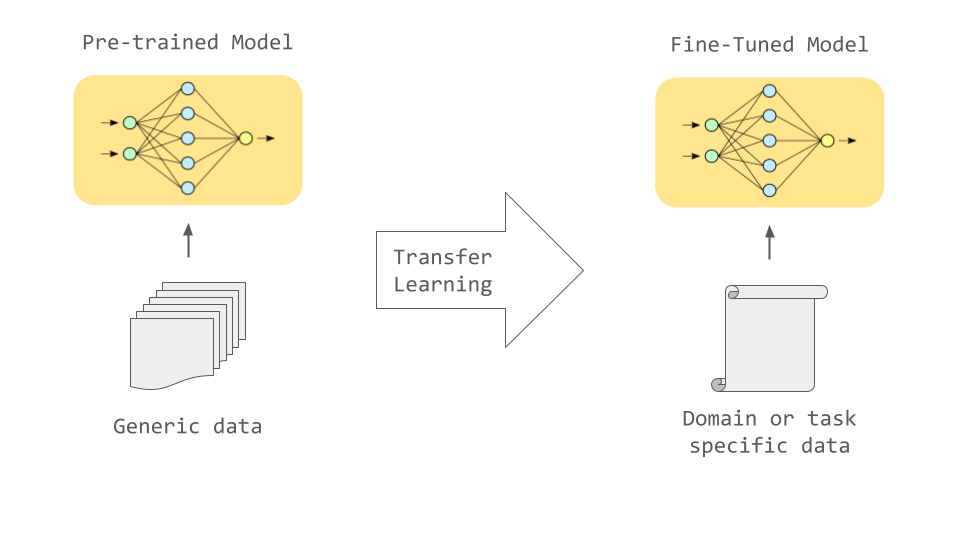

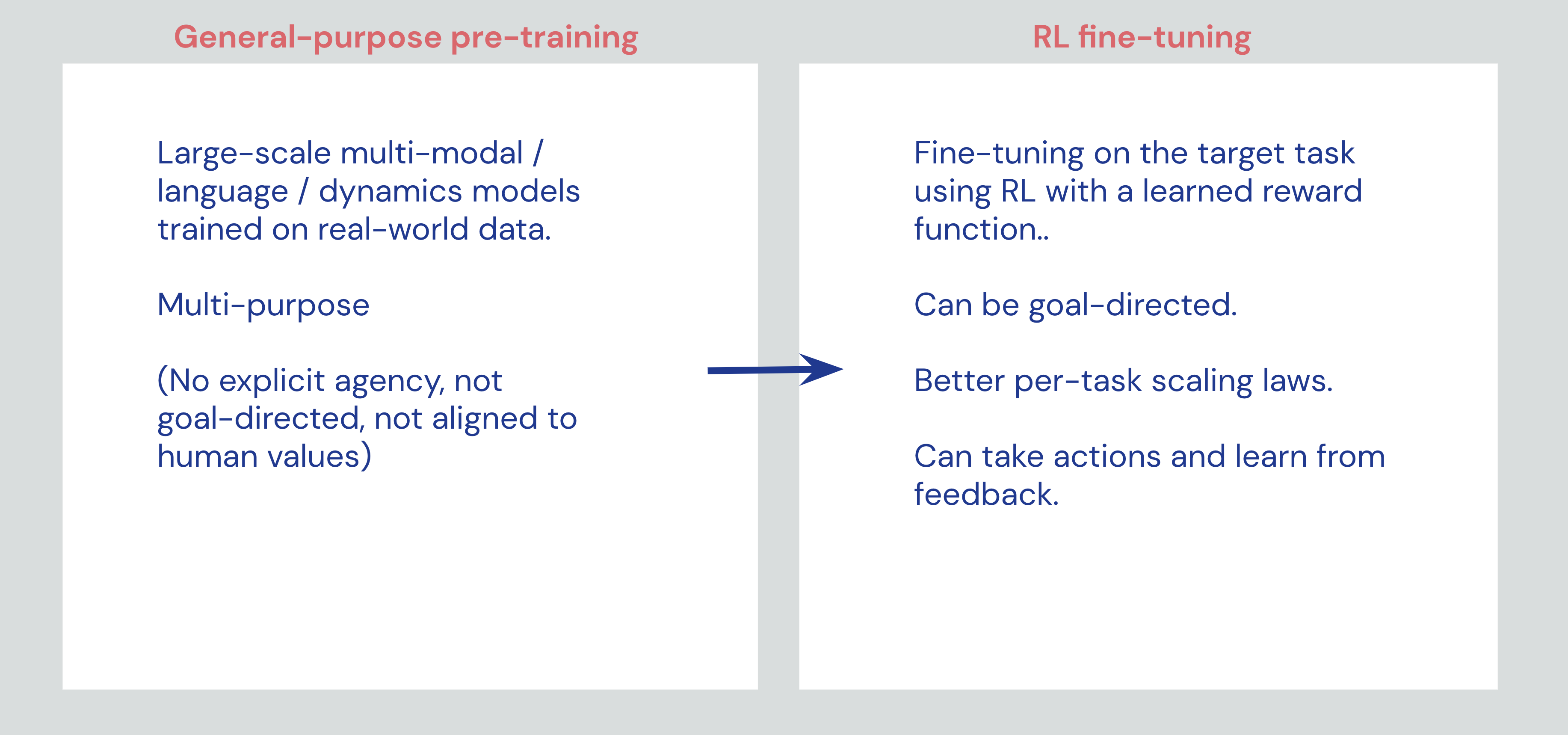

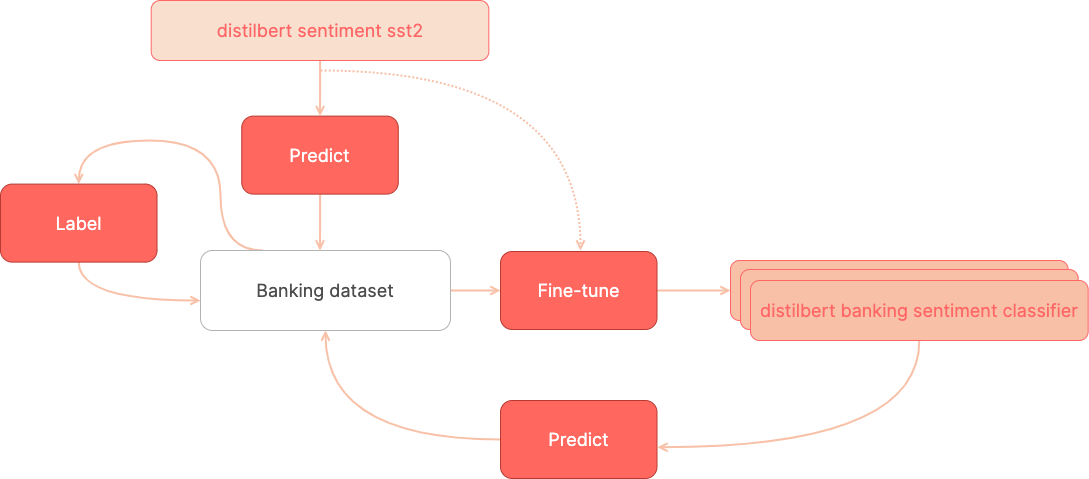

Large language models are first trained on massive text datasets in a process known as pre-training, through which they gain a solid grasp of grammar, facts, and reasoning. Next comes fine-tuning, which specializes the model for particular tasks or domains by continuing to train it on smaller, targeted datasets. And let's not forget the one that makes prompt engineering possible: in-context learning, which allows models to adapt their responses on the fly based on the specific queries or prompts they are given, without any change to their weights.
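To make the distinction concrete, here is a toy sketch in Python. It is an illustration under stated assumptions, not how LLMs are actually trained: a hypothetical two-parameter linear model (`ToyModel`) stands in for a network's weights, plain SGD stands in for large-scale optimization, and the datasets and the `Input:`/`Output:` few-shot prompt format are made up for the example. What it does show is the key difference: pre-training and fine-tuning both update weights (fine-tuning starts from the pre-trained ones), while in-context learning leaves the weights untouched and puts the task into the prompt.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyModel:
    """Hypothetical stand-in for an LLM's parameters: y = w * x + b."""
    w: float = 0.0
    b: float = 0.0

    def predict(self, x: float) -> float:
        return self.w * x + self.b


def train(model: ToyModel, data: list[tuple[float, float]], lr: float, epochs: int) -> ToyModel:
    """SGD on squared error, starting from `model`'s current weights.

    Returns a new ToyModel; the input model is left unchanged.
    """
    w, b = model.w, model.b
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            # Gradient of 0.5 * err**2 w.r.t. w is err * x; w.r.t. b it is err.
            w -= lr * err * x
            b -= lr * err
    return ToyModel(w, b)


def build_few_shot_prompt(demos: list[tuple[str, str]], query: str) -> str:
    """In-context learning: demonstrations go into the prompt; no weights change."""
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in demos]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


# 1) Pre-training: learn a general pattern (y = 2x) from scratch on a "broad" dataset.
broad_data = [(float(x), 2.0 * x) for x in range(5)]
pretrained = train(ToyModel(), broad_data, lr=0.01, epochs=500)

# 2) Fine-tuning: continue from the pre-trained weights on a small "domain" dataset
#    whose targets are shifted (y = 2x + 1), so the model specializes.
domain_data = [(float(x), 2.0 * x + 1.0) for x in range(3)]
fine_tuned = train(pretrained, domain_data, lr=0.05, epochs=200)

# 3) In-context learning: the task is conveyed by examples inside the prompt itself.
prompt = build_few_shot_prompt(
    [("I loved this film.", "positive"), ("Total waste of time.", "negative")],
    "The acting was superb.",
)

print(f"pre-trained: w={pretrained.w:.2f}, b={pretrained.b:.2f}")
print(f"fine-tuned:  w={fine_tuned.w:.2f}, b={fine_tuned.b:.2f}")
print(prompt)
```

Note that fine-tuning calls `train` on `pretrained` rather than on a fresh `ToyModel()`: reusing the pre-trained weights as the starting point is what separates fine-tuning from training from scratch. The prompt, by contrast, would simply be sent to an already-trained model as-is; nothing in `pretrained` or `fine_tuned` changes.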

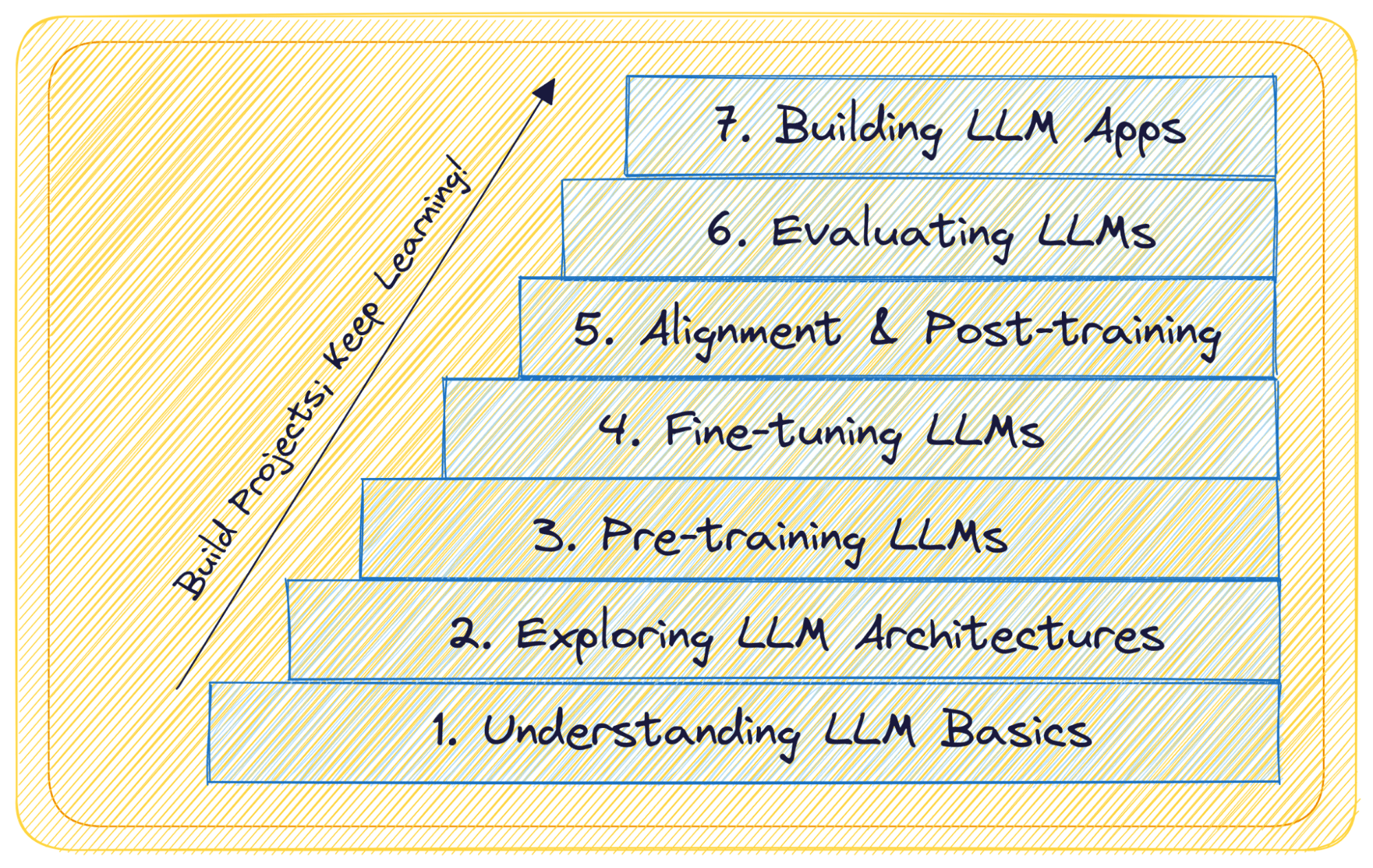

The overview of our pre-training and fine-tuning framework.