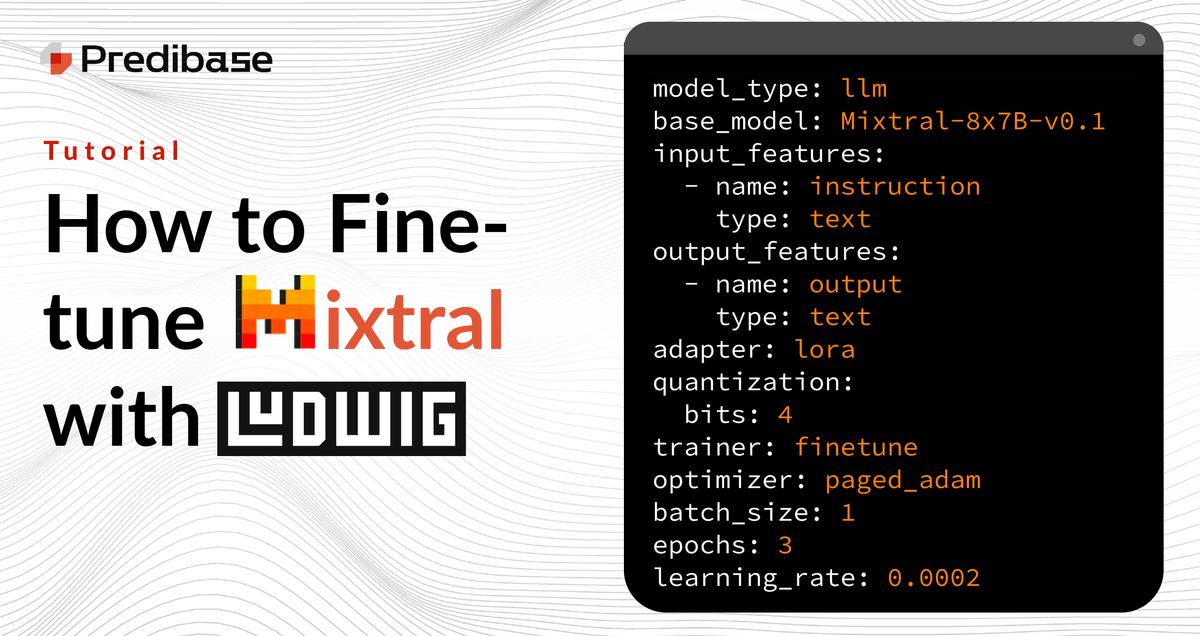

How to Fine-tune Mixtral 8x7b with Open-source Ludwig - Predibase

Learn how to reliably and efficiently fine-tune Mixtral 8x7B on commodity hardware in just a few lines of code with Ludwig, the open-source framework for building custom LLMs. This short tutorial provides code snippets to help get you started.
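As a rough illustration of what "a few lines of code" can look like, here is a minimal sketch of a Ludwig fine-tuning setup for Mixtral 8x7B. It is not the tutorial's own snippet. The 4-bit quantization, the LoRA adapter, the trainer values, the column names `instruction` and `output`, and the Hugging Face model ID are all assumptions chosen for illustration, and the helper names `build_config` and `train` are hypothetical.

```python
"""Sketch: a 4-bit + LoRA fine-tuning config for Mixtral 8x7B with Ludwig."""


def build_config(base_model="mistralai/Mixtral-8x7B-v0.1"):
    """Return a Ludwig LLM fine-tuning config as a plain dict.

    All values here are illustrative assumptions, not settings taken
    from the tutorial.
    """
    return {
        "model_type": "llm",
        "base_model": base_model,
        # Load the base weights in 4-bit to reduce GPU memory use.
        "quantization": {"bits": 4},
        # Train a small LoRA adapter instead of the full model.
        "adapter": {"type": "lora"},
        # Hypothetical dataset columns: "instruction" in, "output" out.
        "input_features": [{"name": "instruction", "type": "text"}],
        "output_features": [{"name": "output", "type": "text"}],
        "trainer": {
            "type": "finetune",
            "epochs": 1,
            "batch_size": 1,
            "gradient_accumulation_steps": 16,
            "learning_rate": 0.0002,
        },
    }


def train(dataset_path):
    """Fine-tune on a CSV at dataset_path (requires `pip install ludwig[llm]`)."""
    # Imported lazily so the config can be inspected without Ludwig installed.
    from ludwig.api import LudwigModel

    model = LudwigModel(config=build_config())
    return model.train(dataset=dataset_path)
```

Calling `train("train.csv")` with a hypothetical CSV containing `instruction` and `output` columns would start a run. Whether it fits on a given GPU depends on the hardware and on the values above.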

2023 December Newsletter - Predibase

Graduate from OpenAI to Open-Source: 12 best practices for

Fine-tune Mixtral 8x7b on AWS SageMaker and Deploy to RunPod

Arnav Garg (@grg_arnav) / X

Ludwig (@ludwig_ai) / X

Deep Learning – Predibase

Little Bear Labs on LinkedIn: LoRAX: The Open Source Framework for

Live Interactive Demo featuring Predibase

Fine Tune mistral-7b-instruct on Predibase with Your Own Data and

Predibase on LinkedIn: #llms #deeplearning #ai #mixtral #lorax

GitHub - predibase/llm_distillation_playbook: Best practices for

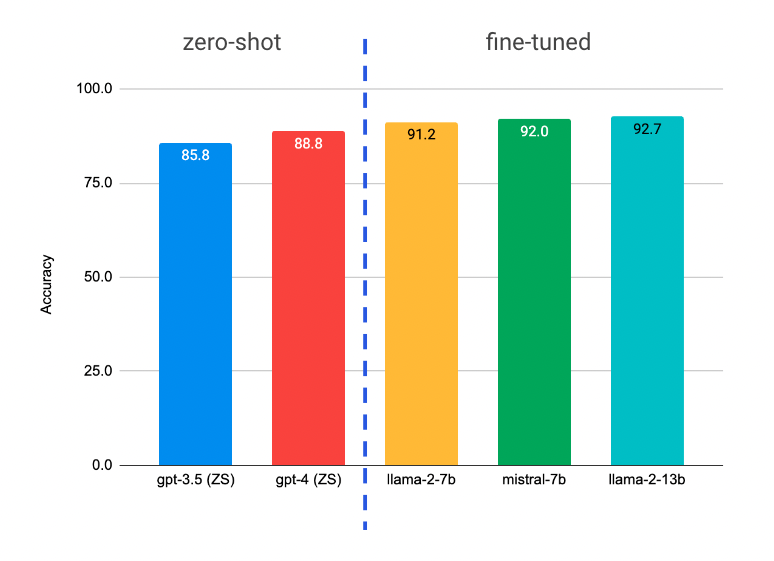

Fine-tuning Mistral 7B on a Single GPU with Ludwig - Predibase