RAG vs Finetuning - Your Best Approach to Boost LLM Applications

Description

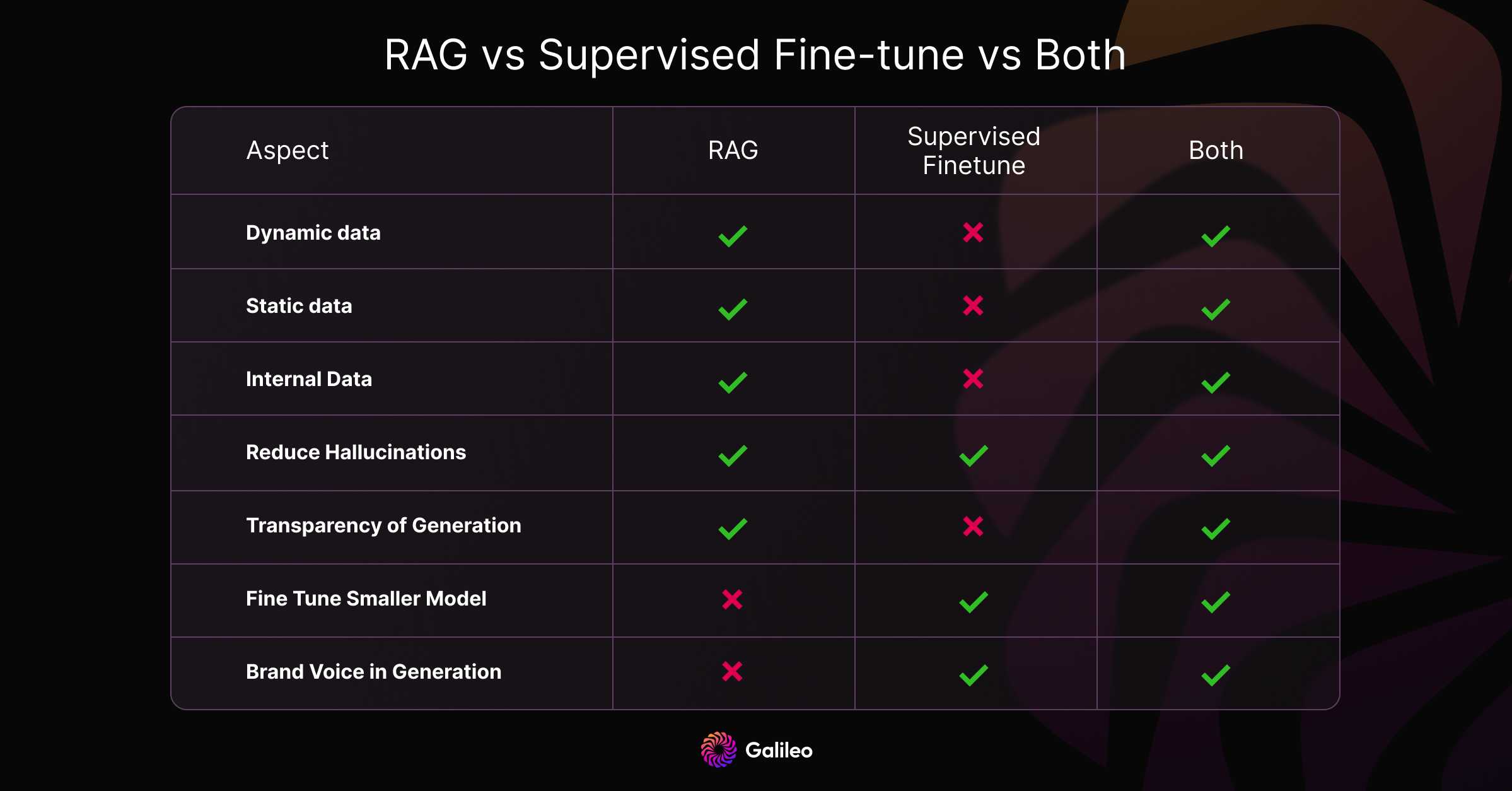

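There are two main approaches to improving the performance of large language models (LLMs) on specific tasks: finetuning and retrieval-augmented generation (RAG). Finetuning updates the weights of an LLM that has already been pre-trained on a large corpus of text and code, using additional task-specific training data. RAG leaves the weights unchanged: at query time it retrieves relevant documents and adds them to the model's prompt.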
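To make the contrast concrete, here is a minimal sketch of the retrieval side of RAG. It assumes a hypothetical in-memory document list (`DOCUMENTS`) and uses simple bag-of-words cosine similarity as a stand-in for the learned embeddings a real system would use; the actual LLM call is omitted. Unlike finetuning, nothing here touches model weights. The retrieved text is simply placed into the prompt.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base; a real system would index your own documents.
DOCUMENTS = [
    "Finetuning updates the weights of a pre-trained language model.",
    "Retrieval-augmented generation adds retrieved documents to the prompt.",
    "Embeddings map text to vectors so similar texts are close together.",
]


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep runs of letters and digits as tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    # Rank documents by similarity to the query and return the top k.
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    # Augment the prompt with retrieved context; the model weights stay unchanged.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )


if __name__ == "__main__":
    print(build_prompt("What does retrieval-augmented generation add to the prompt?", DOCUMENTS))
```

Swapping `retrieve` for an embedding-based vector search changes only the ranking step; the prompt-augmentation pattern stays the same.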

What is RAG? A simple Python code with a RAG-like approach

Finetuning LLM

Issue 13: LLM Benchmarking

Issue 24: The Algorithms behind the magic

50 excellent ChatGPT prompts specifically tailored for programmers

Controlling Packets on the Wire: Moving from Strength to Domination

Accelerating technological changes - Holodeck by Midjourney CEO

Language Embeddings

MedPaLM vs ChatGPT - First do no harm
