GitHub - bytedance/effective_transformer: Running BERT without Padding

[2211.05102] 1 Introduction

(PDF) Packing: Towards 2x NLP BERT Acceleration

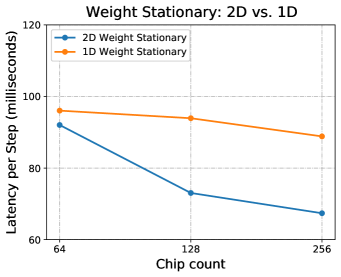

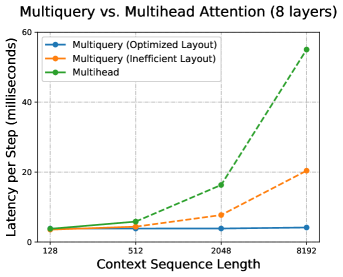

(PDF) Efficiently Scaling Transformer Inference

In built code not able to download for bert-base-uncased when running on cluster. · Issue #8137 · huggingface/transformers · GitHub

BART finetune.py: model not learning anything · Issue #5271 · huggingface/transformers · GitHub

Tokenizing in the dataset and padding manually using tokenizer.pad in the collator · Issue #12307 · huggingface/transformers · GitHub

What to do about this warning message: Some weights of the model checkpoint at bert-base-uncased were not used when initializing BertForSequenceClassification · Issue #5421 · huggingface/transformers · GitHub

Why only use pre-trained BERT Tokenizer but not the entire pre-trained BERT model(including the pre-trained encoder)? · Issue #115 · CompVis/latent-diffusion · GitHub

BertForSequenceClassification.from_pretrained · Issue #22 · jessevig/bertviz · GitHub