Prompt Compression: Enhancing Inference and Efficiency with LLMLingua - Goglides Dev 🌱

Let's start with a fundamental concept and then dive deep into the project: what is prompt compression?

Tagged with promptcompression, llmlingua, rag, llamaindex.

LLMLingua: Innovating LLM efficiency with prompt compression - Microsoft Research

Prompt Compression and Contrastive Conditioning for Controllability and Toxicity Reduction in Language Models (PDF)

LLMLingua: Prompt Compression makes LLM Inference Supercharged 🚀

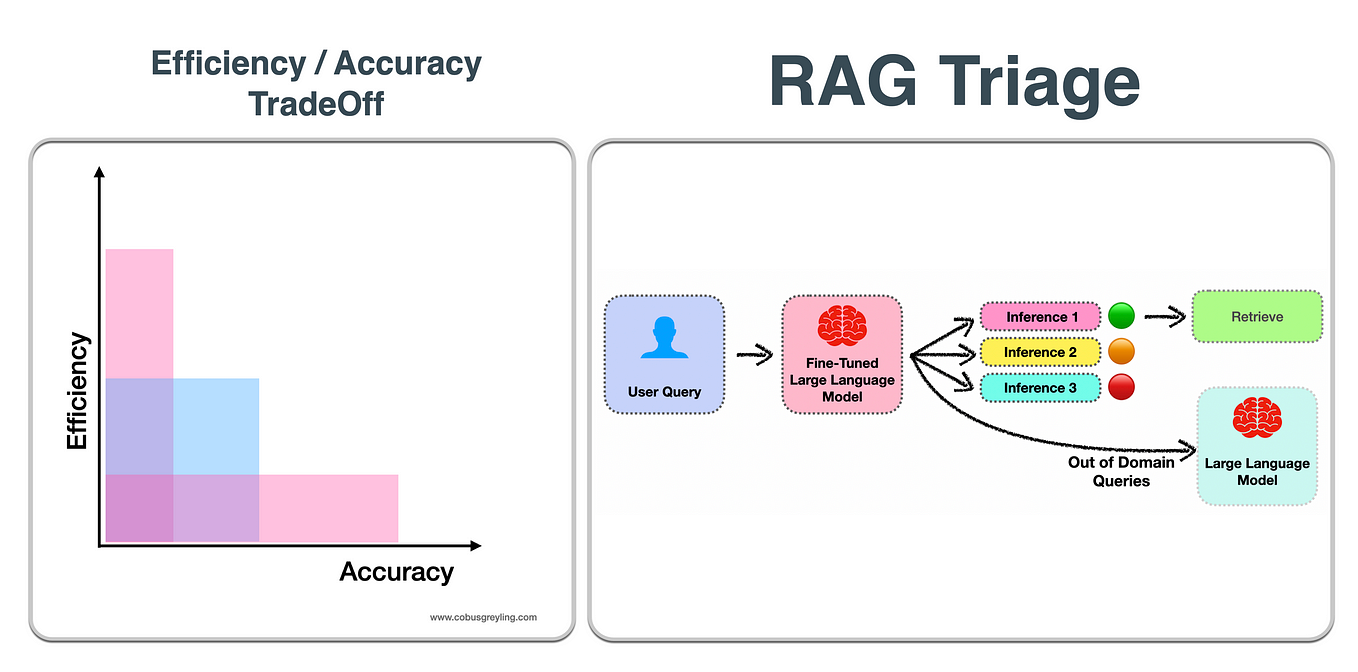

LLM Drift, Prompt Drift & Cascading, by Cobus Greyling (Feb 2024)

Vinija's Notes • Primers • Prompt Engineering

Save Money When Using GPT-4 by Compressing Prompts 20 Times!