MPT-30B: Raising the bar for open-source foundation models

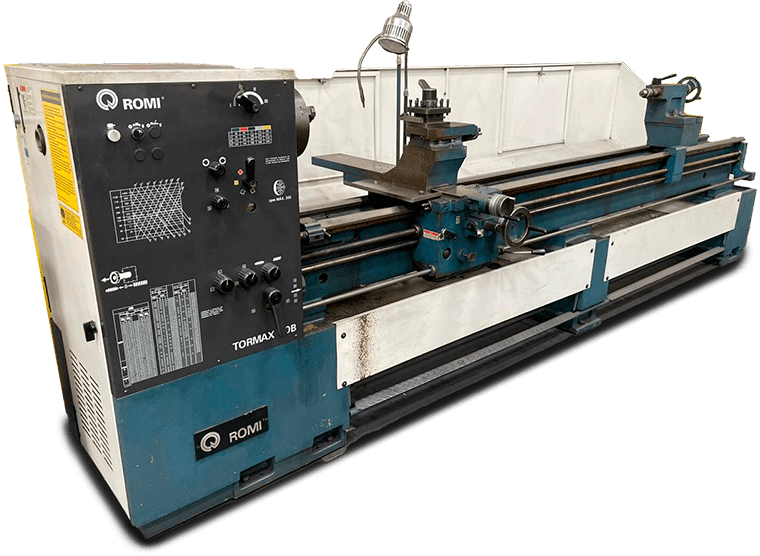

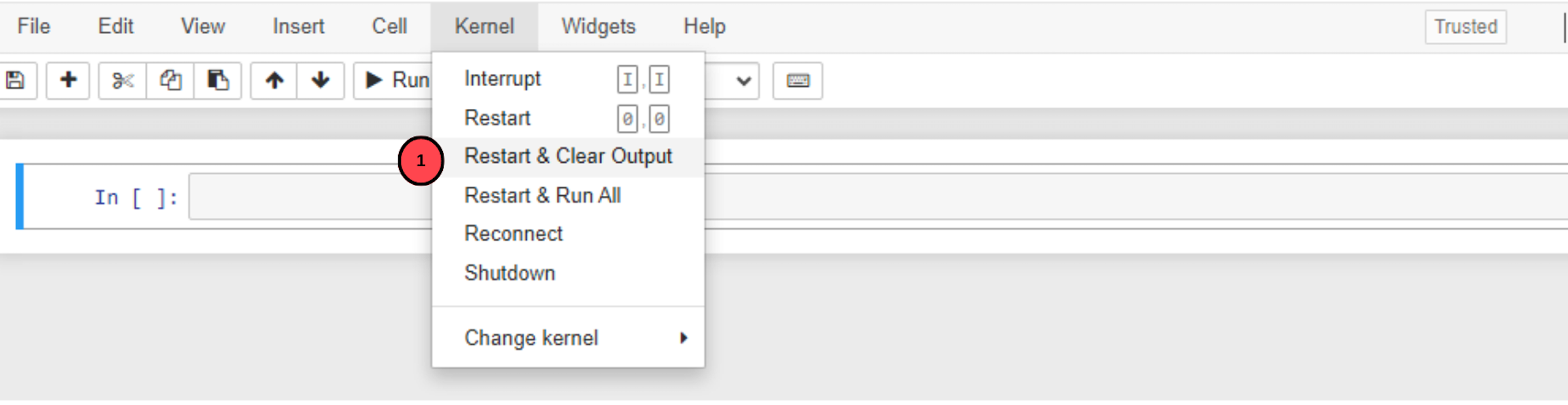

Introducing MPT-30B, a new, more powerful member of our Foundation Series of open-source models, trained with an 8k context length on NVIDIA H100 Tensor Core GPUs.
