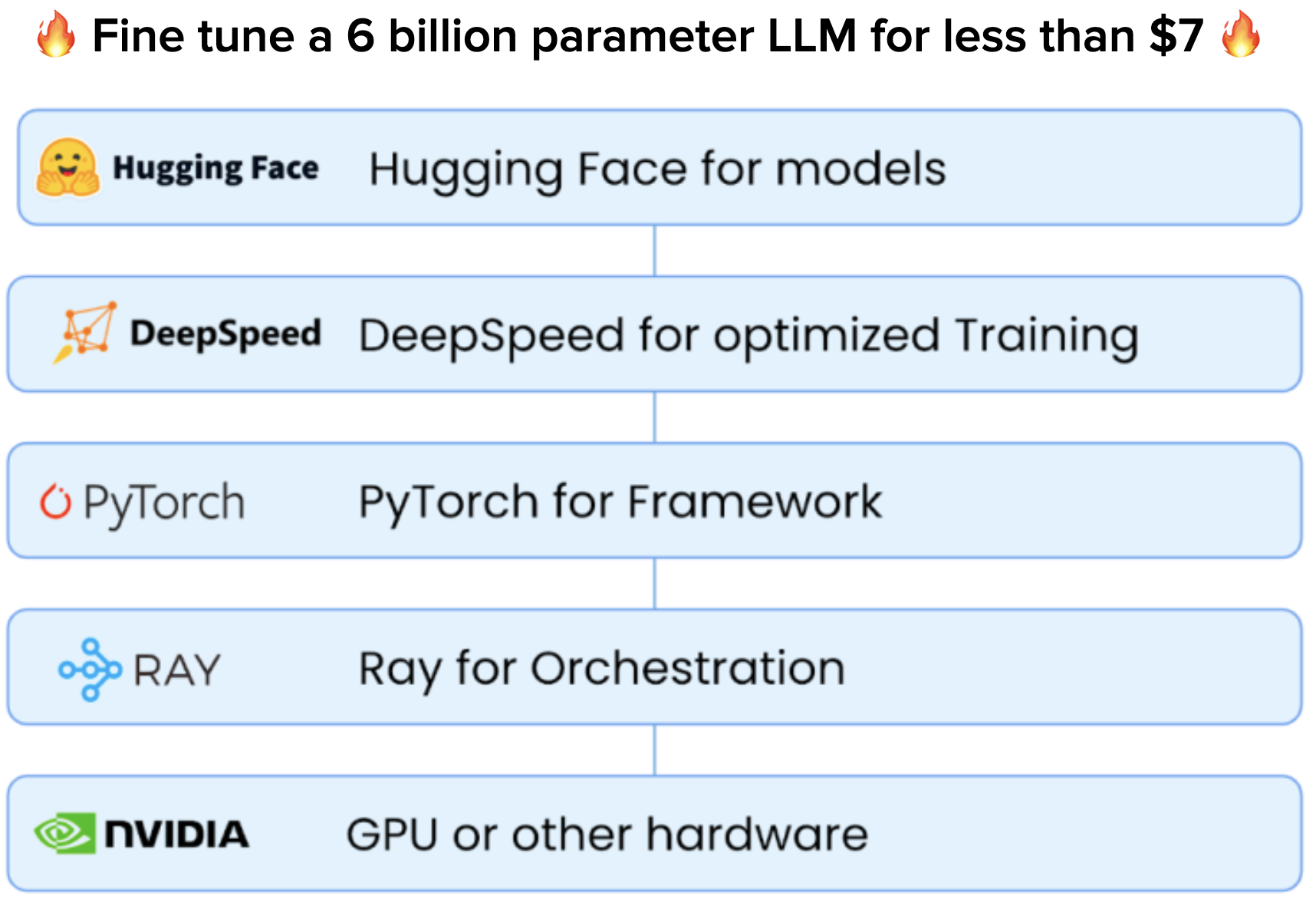

How to Fine-Tune a 6 Billion Parameter LLM for Less Than $7

In part 4 of our Generative AI series, we share how to build a system for fine-tuning & serving LLMs in 40 minutes or less.

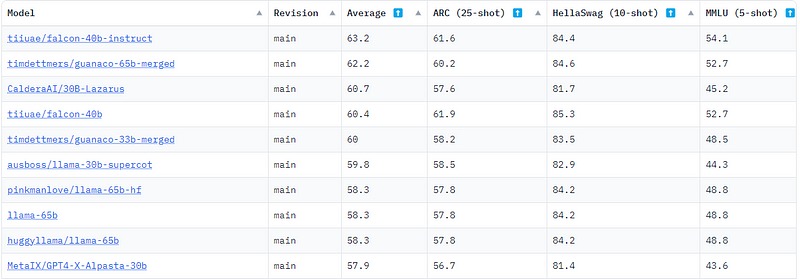

Fine-Tuning the Falcon LLM 7-Billion Parameter Model on Intel

How Smaller LLMs Could Slash Costs of Generative AI

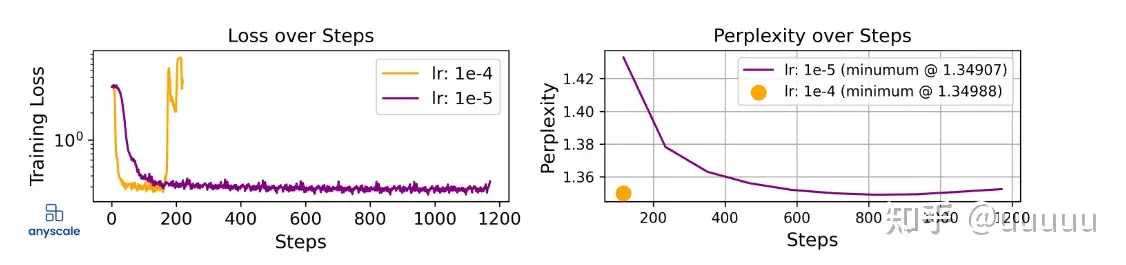

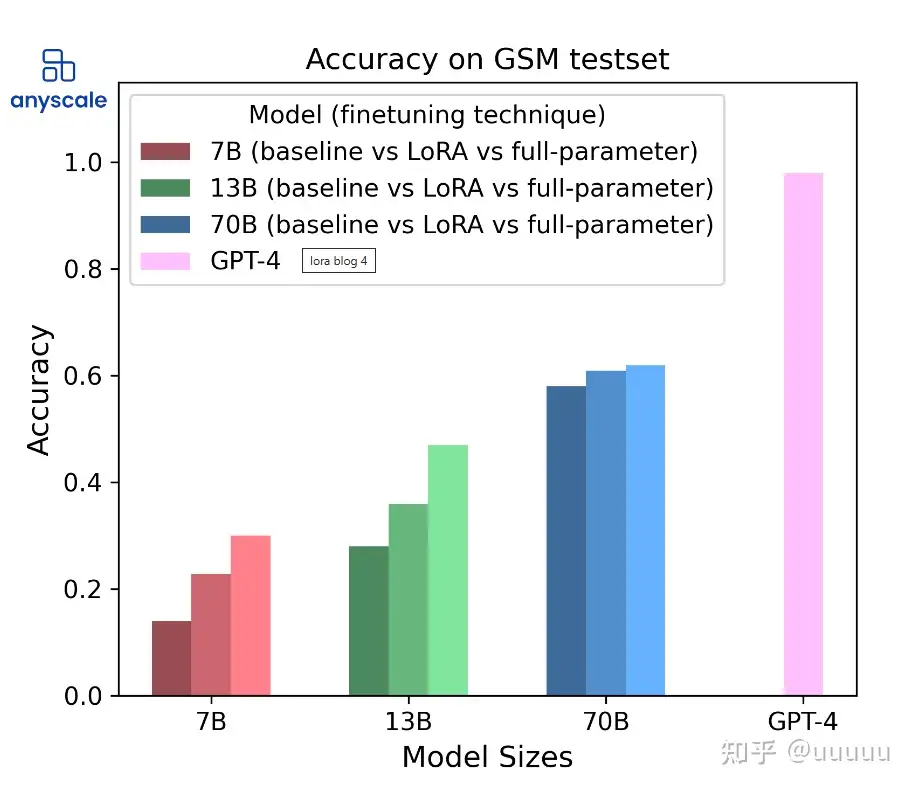

Musings on Fine-Tuning Large Language Models (LLMs) - Zhihu

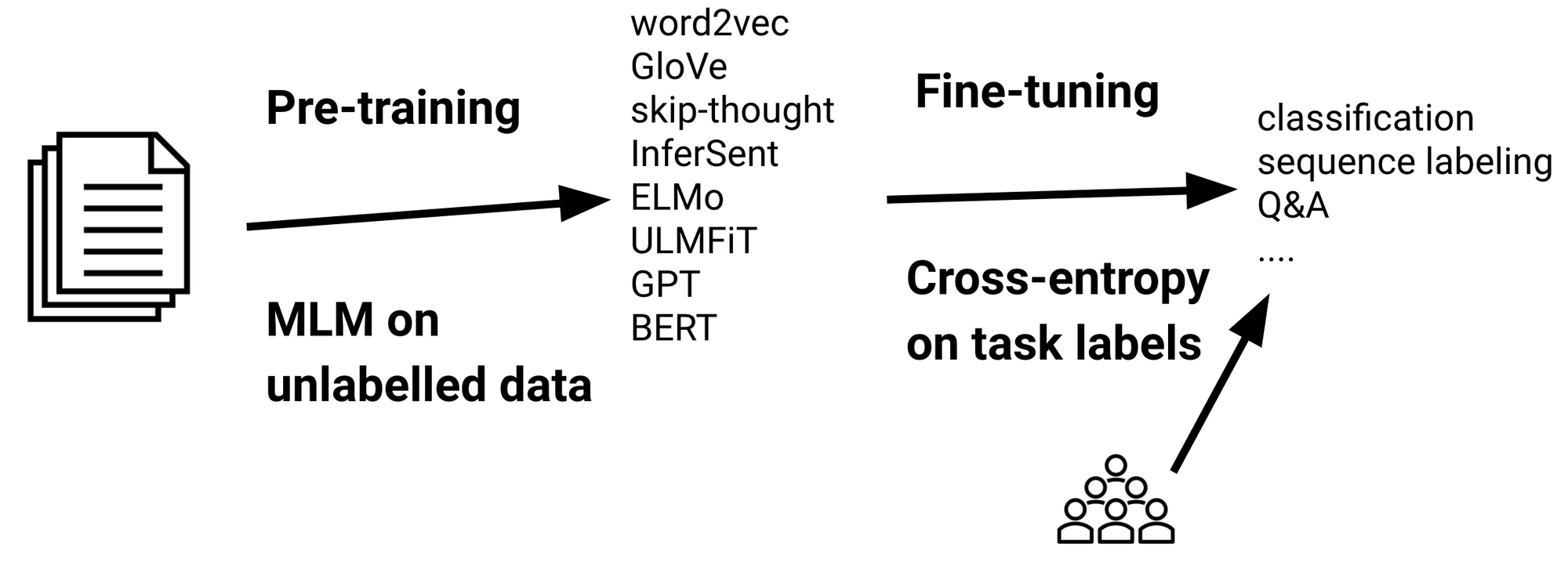

Fine-tuning methods of Large Language models

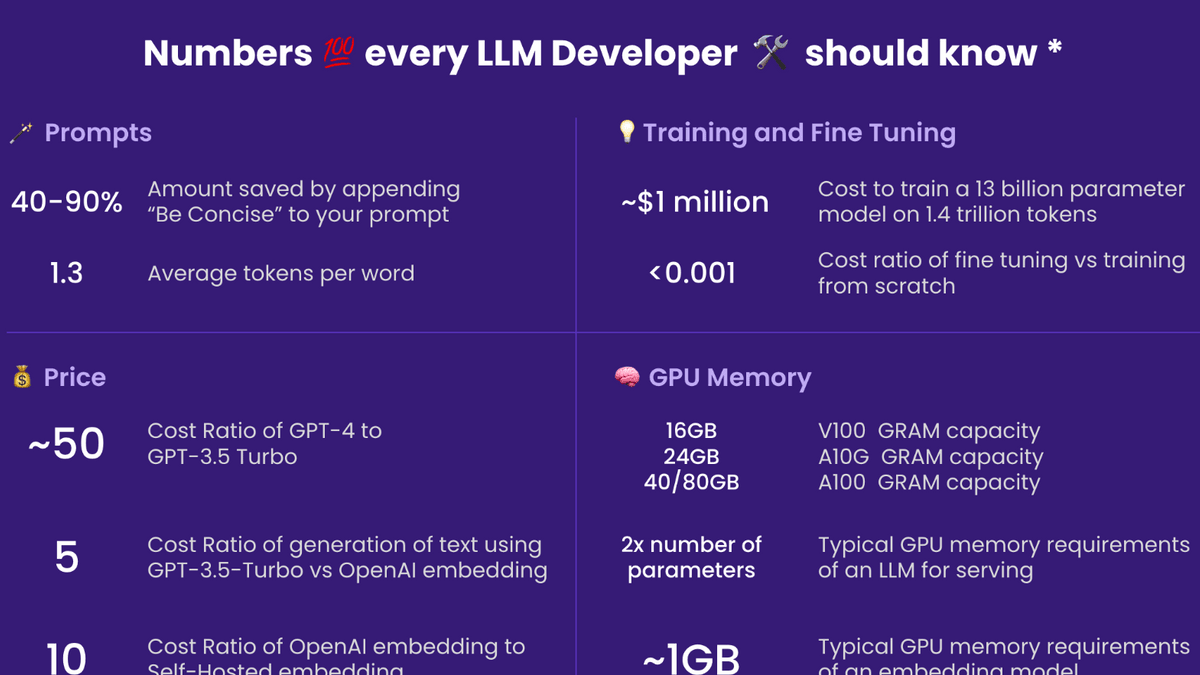

Various Numbers Good to Know for Developers of Large Language Models - GIGAZINE

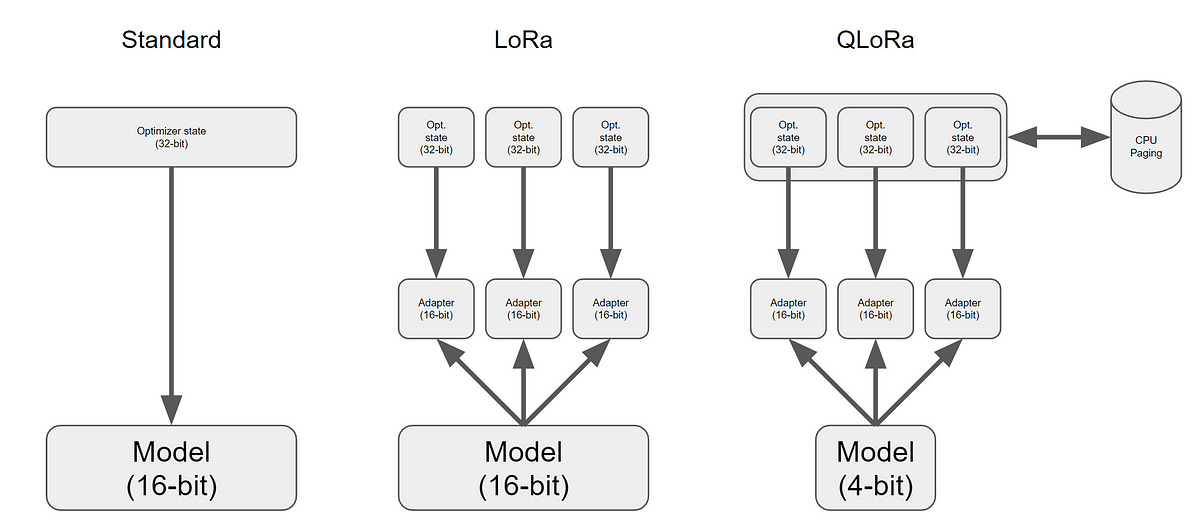

Summary Of Adapter Based Performance Efficient Fine Tuning (PEFT) Techniques For Large Language Models

Fine Tuning Large Language Models: A Complete Guide to Building an LLM

Andrei-Alexandru Tulbure on LinkedIn: Google launches two new open

QLoRA: Fine-Tune a Large Language Model on Your GPU

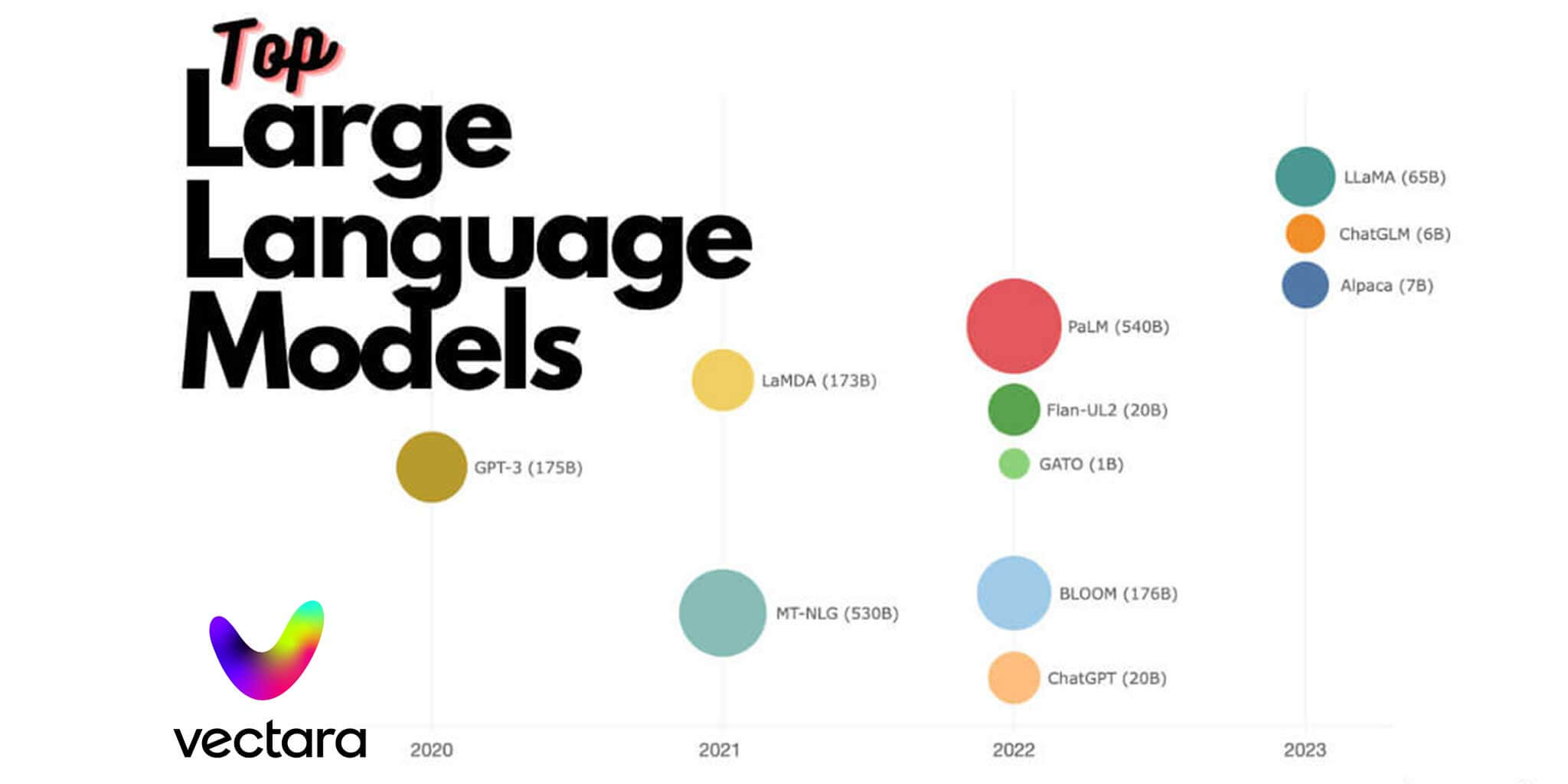

Top Large Language Models (LLMs): GPT-4, LLaMA 2, Mistral 7B, ChatGPT, and More - Vectara

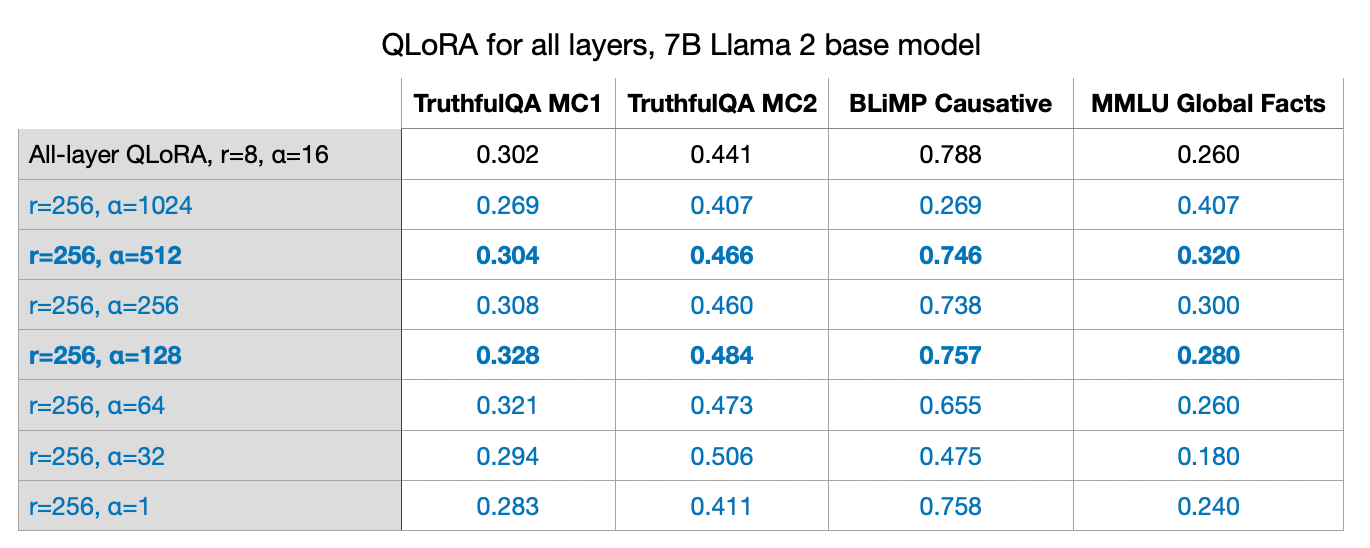

Practical Tips for Finetuning LLMs Using LoRA (Low-Rank Adaptation)

Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learning

Training and fine-tuning large language models - Borealis AI

Tanay Chowdhury on LinkedIn: Video generation models as world