Voice Assistants Have a Variety of Overlooked Vulnerabilities

A new type of attack on voice assistants uses ultrasonic waves, which are inaudible to humans without special equipment, to access the devices through solid surfaces.

Conversational AI and Quality Assurance: A complete guide

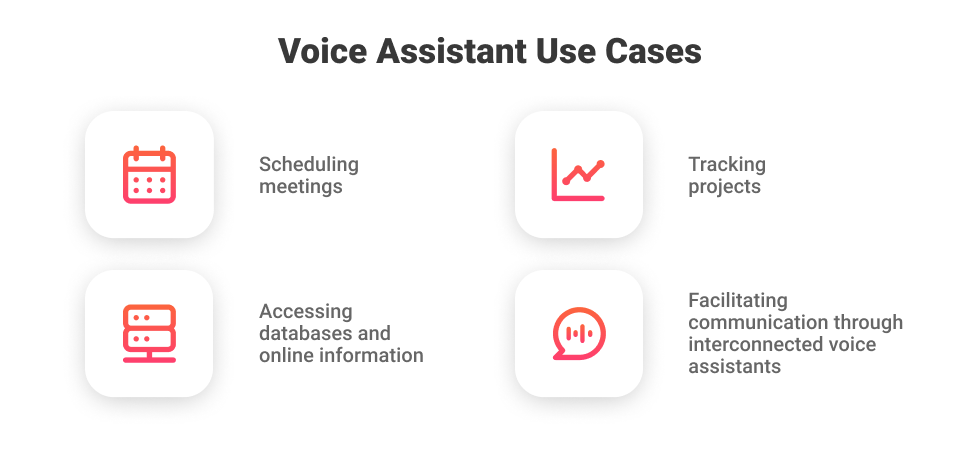

How AI Voice Assistants Are Transforming The Enterprise

What About Devices with Personal Voice Assistants?

A Look into the Vulnerability of Voice Assisted IoT

Hey Siri: What Should Users Know About Voice-Controlled Tech?

Types of AI-based Voice Assistants

Your Privacy May Feel Safer at Home – But Your Smart Speaker Is

Researchers exploit vulnerabilities of smart device microphones and voice assistants

Flawless Deepfake Audio Is a Serious Security Concern

What can go wrong when using voice assistants? Add your scenarios, by Maarten Lens-FitzGerald

Rutgers Professor Creates App to Secure Virtual Assistants from Hacking

Voice Assistants & Voice Computing – Cyber Security and Avoiding Risk - MiCOM Labs

New Study Unveils Hidden Vulnerabilities in AI

Voice Assistant: Best Practices & Limitations