Using SHAP Values to Explain How Your Machine Learning Model Works, by Vinícius Trevisan

Medium

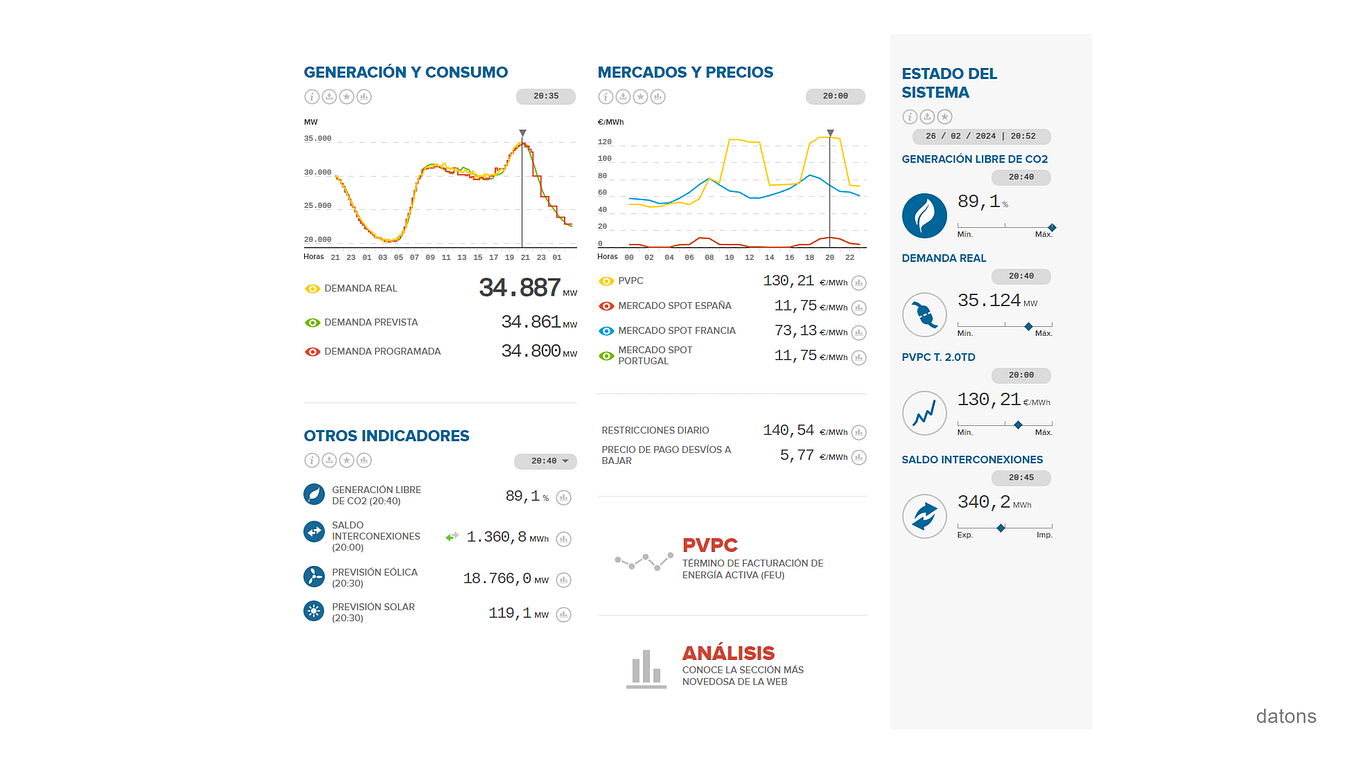

Introduction to Explainable AI (Explainable Artificial Intelligence or XAI) - 10 Senses

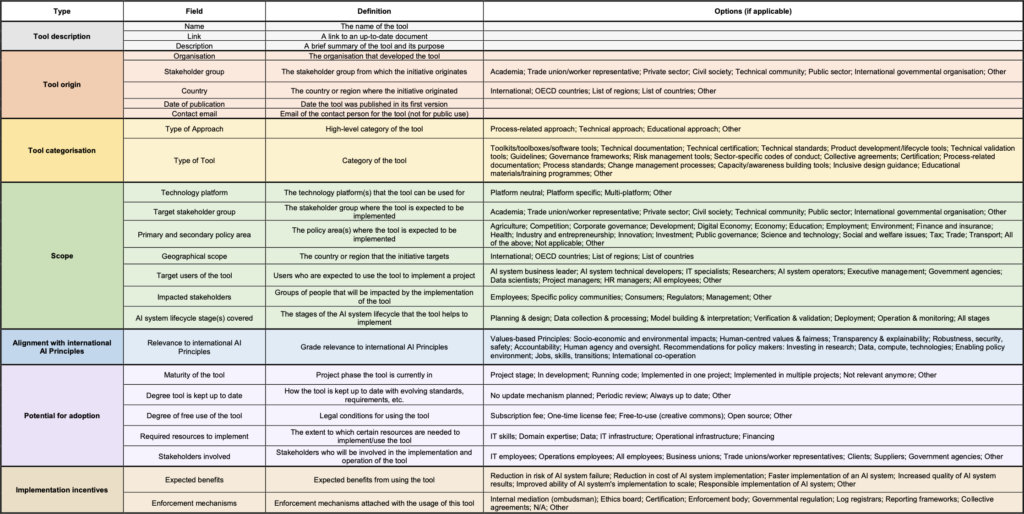

New Report: Risky Analysis: Assessing and Improving AI Governance Tools

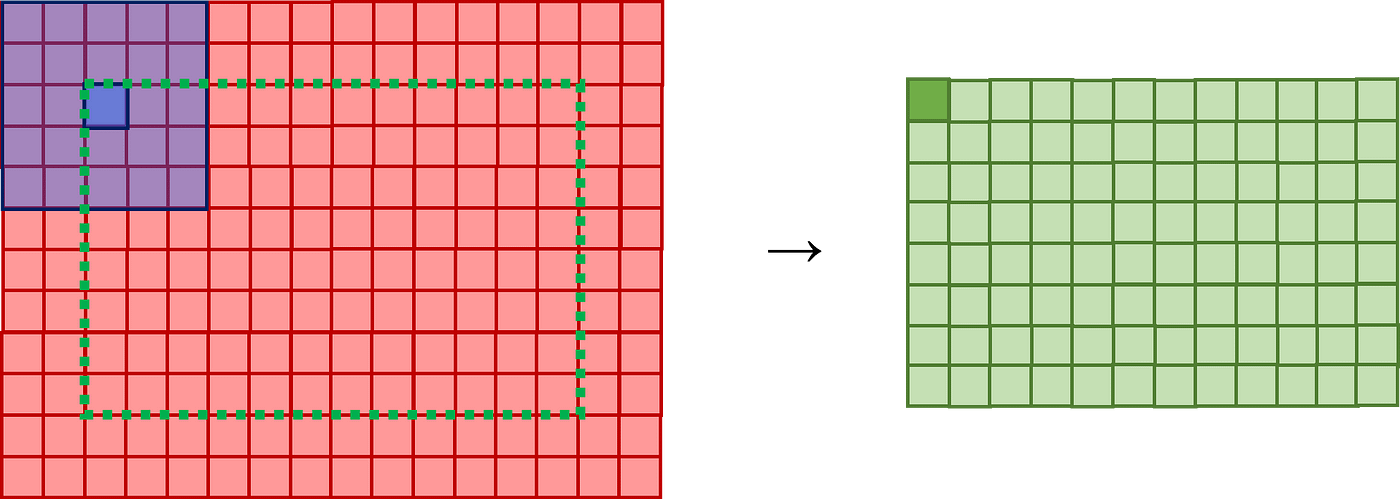

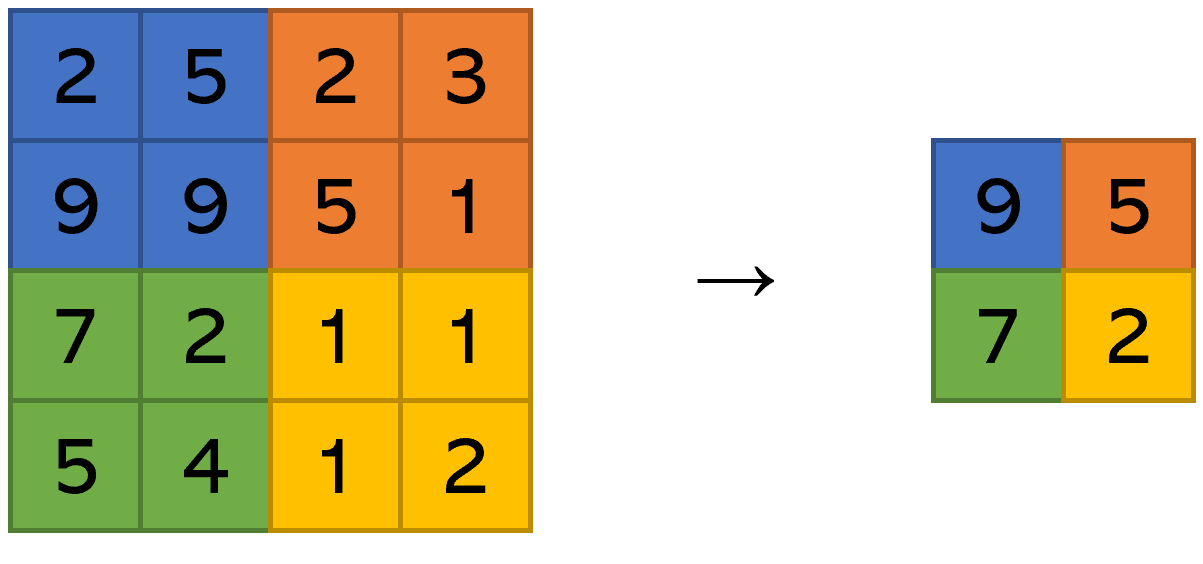

Understanding Convolutional Neural Networks (CNNs), by Vinícius Trevisan

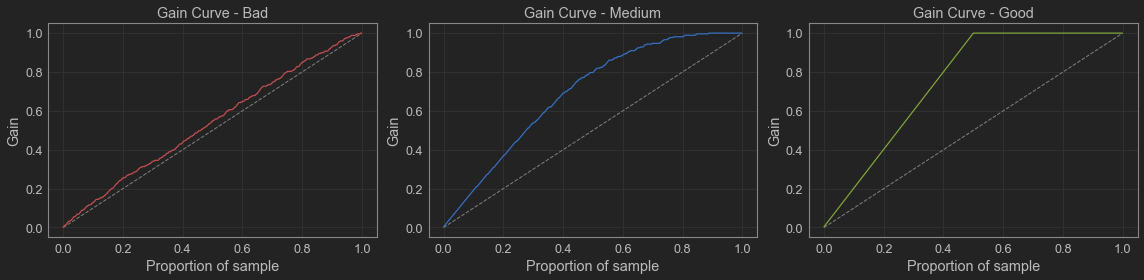

Evaluating the potential return of a model with Lift, Gain, and Decile Analysis, by Vinícius Trevisan

Boruta SHAP: A Tool for Feature Selection Every Data Scientist Should Know, by Vinícius Trevisan

Is your ML model stable? Checking model stability and population drift with PSI and CSI, by Vinícius Trevisan

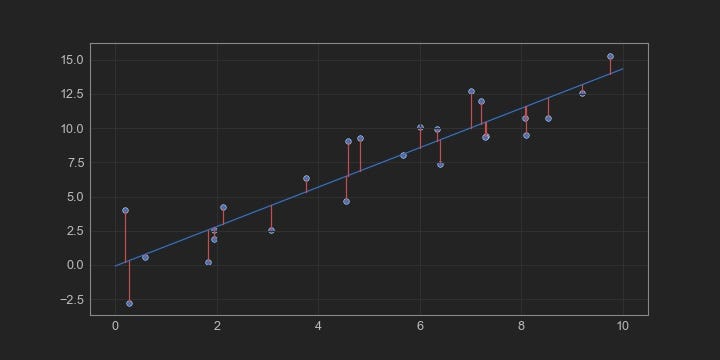

Comparing Robustness of MAE, MSE and RMSE, by Vinícius Trevisan

Is it correct to use the test data to produce the Shapley values? I believe we should use the training data, since we are explaining the model, which was configured…
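
The question mixes up two different roles that data plays in SHAP. The background (reference) rows define the baseline expectation. The explained rows are the ones that receive attributions. Below is a minimal sketch that computes exact interventional Shapley values by brute-force enumeration in plain Python. It does not use the `shap` library's API. The toy `model`, the background rows, and `x_test` are all hypothetical. The background rows stand in for a sample of training data and only set the baseline. The test row is the one being explained.

```python
from itertools import combinations
from math import factorial
from statistics import mean


def model(row):
    # Hypothetical toy model standing in for a trained ML model.
    x0, x1, x2 = row
    return 2.0 * x0 + x1 * x2


def coalition_value(f, x, background, subset):
    """Average model output when features in `subset` take their values from x
    and all other features take their values from each background row."""
    outputs = []
    for b in background:
        mixed = [x[i] if i in subset else b[i] for i in range(len(x))]
        outputs.append(f(mixed))
    return mean(outputs)


def shapley_values(f, x, background):
    """Exact Shapley values for row x, enumerating every coalition of features."""
    n = len(x)
    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                gain = (coalition_value(f, x, background, s | {i})
                        - coalition_value(f, x, background, s))
                total += weight * gain
        phi.append(total)
    return phi


# Background ("reference") rows: hypothetical sample of training data.
background = [[0.0, 1.0, 2.0], [1.0, 0.0, 1.0], [2.0, 2.0, 0.0]]
# Row to explain: hypothetical row of test data.
x_test = [1.0, 3.0, 1.0]

phi = shapley_values(model, x_test, background)
base_value = mean(model(b) for b in background)
# Efficiency property: the baseline plus all attributions recovers the prediction.
print(base_value + sum(phi), model(x_test))
```

The background data enters only through the baseline. As a result, `base_value + sum(phi)` adds back up to the prediction for the explained row, whichever rows you choose to explain. Exact enumeration is exponential in the number of features, which is why practical SHAP explainers approximate or specialize this computation.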

Comparing sample distributions with the Kolmogorov-Smirnov (KS) test, by Vinícius Trevisan