Fitting AI models in your pocket with quantization - Stack Overflow

Model Quantization 1: Basic Concepts, by Florian June

neural network - Does static quantization enable the model to feed a layer with the output of the previous one, without converting to fp (and back to int)? - Stack Overflow

generative AI - Stack Overflow

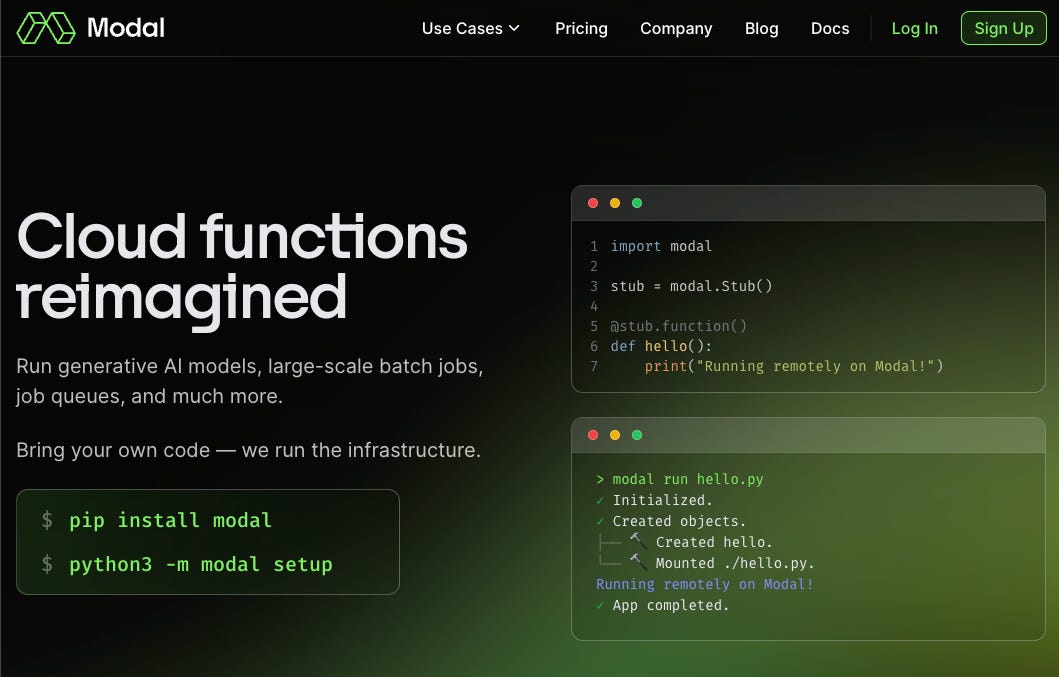

Truly Serverless Infra for AI Engineers - with Erik Bernhardsson of Modal

Navigating the Intricacies of LLM Inference & Serving - Gradient Flow

How to improve my knowledge and skills in artificial intelligence - Quora

How is the deep learning model (its structure, weights, etc.) being stored in practice? - Quora

Is Tensorflow considered a Blackbox solution? - Quora

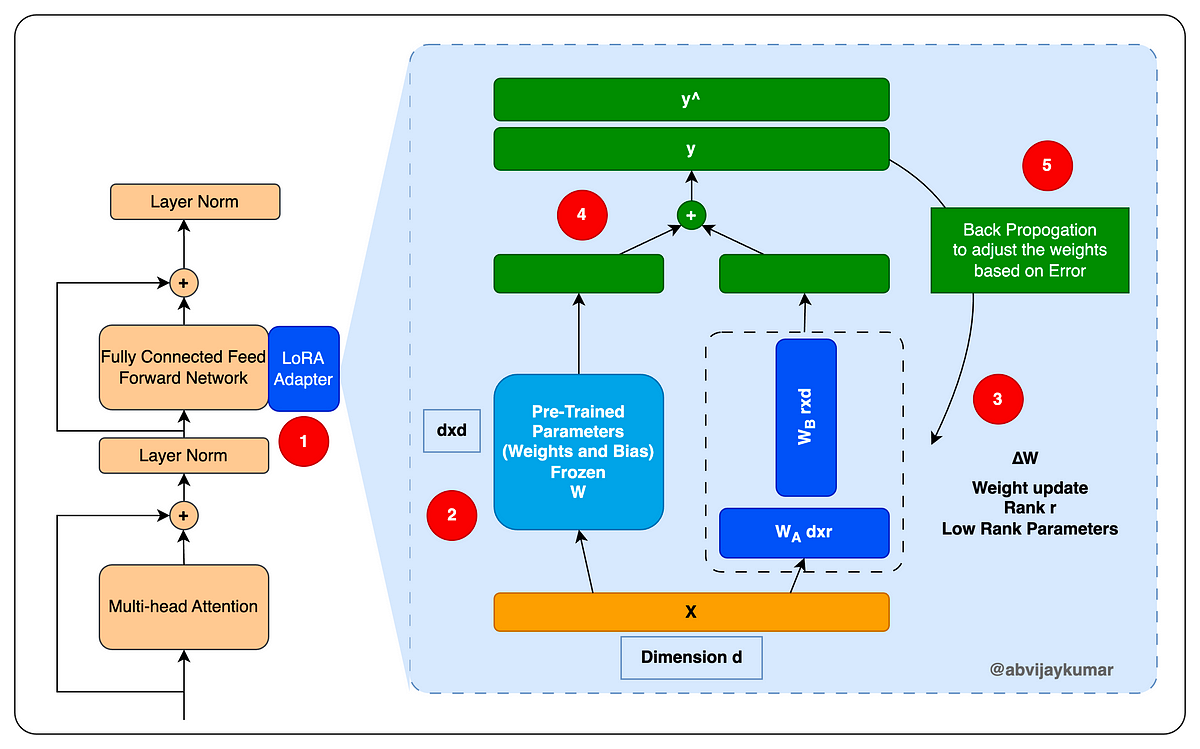

Fine Tuning LLM: Parameter Efficient Fine Tuning (PEFT) — LoRA & QLoRA — Part 1, by A B Vijay Kumar

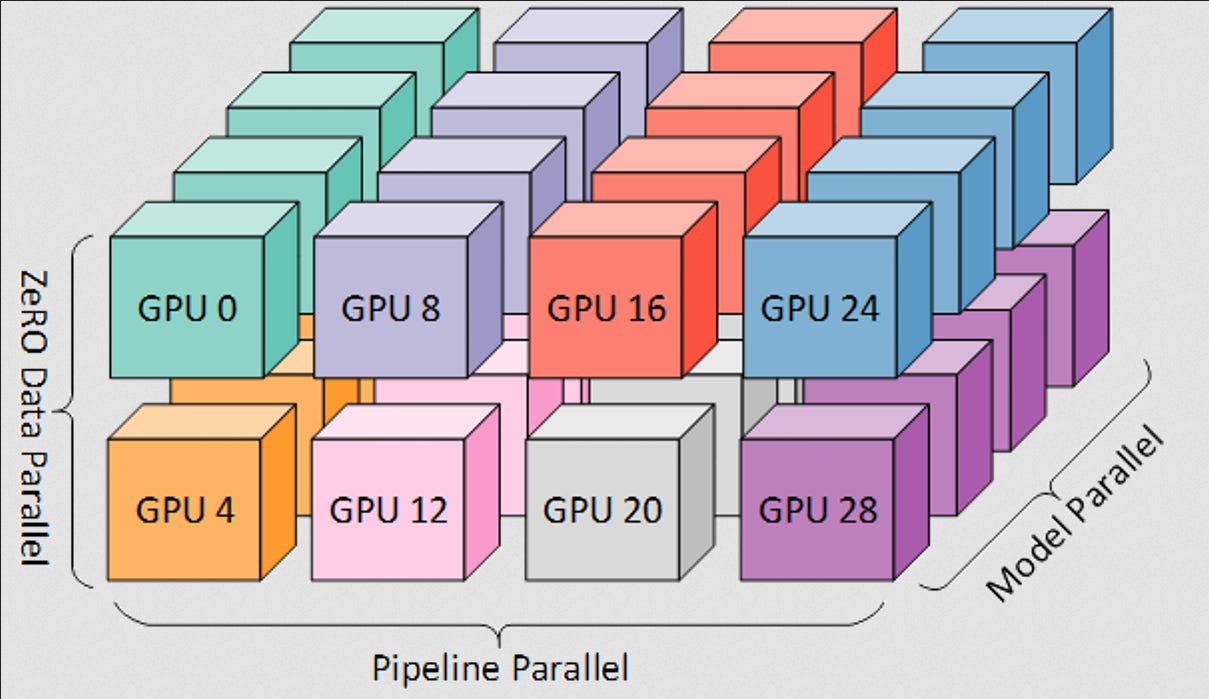

The Mathematics of Training LLMs — with Quentin Anthony of Eleuther AI

What is your experience with artificial intelligence, and can you

The New Era of Efficient LLM Deployment - Gradient Flow